K8s Networking: Fundamentals.

At its core, Kubernetes has a fairly simple networking model.

To understand this model and its implementation, we have to remember some salient facts about k8s networking:

Salient Fact # 1: Kubernetes expects all pod networking (whether the pods reside on the same node or on different nodes) to use the pod's real IP address, without any kind of Network Address Translation (NAT).

Salient Fact # 2: Kubernetes, the orchestration engine, does not ship with a built-in networking implementation. Rather, it relies on external helper plug-ins to achieve its networking goals.

Salient Fact # 3: Slice it any way you like, k8s orchestrates containers (yes, they are bundled inside pods, and yes, pods are the basic unit of management in a cluster) and therefore can (and in fact does) use helpers that implement the Container Network Interface (CNI) specification.

- Part 1: Introduction to Container Networking

- Part 2: Single Host Bridge Networks

- Part 3: Multi-Host Overlay Networks

- Part 4: Service Discovery and Ingress

Note: Kubernetes and k8s are used interchangeably throughout and refer to the same thing.

K8s Networking Topologies

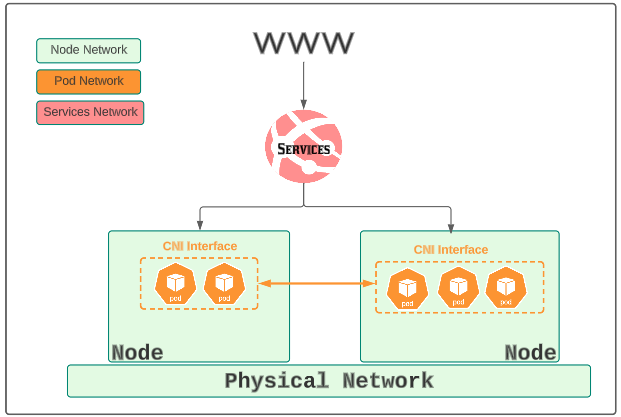

k8s has three types of networking use cases/needs:

- A network of nodes (either in a data centre or in a cloud service provider like AWS, Azure or GCP).

- A network of pods, spread over different nodes. Pod IPs are allocated, typically, via the CNI helper plugin being used. For example, if you use Calico for pod networking support, you will likely be setting up an IP CIDR range of 192.168.0.0/16. Therefore, any Pod started will pick (or rather be given) an IP from this range.

- A network of services, which makes the cluster usable by external consumers (like paying clients).
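The pod CIDR idea from the Calico example above can be sketched with Python's standard `ipaddress` module. This is only an illustration: the `192.168.0.0/16` range comes from the example above, while the helper name `is_pod_ip` and the sample addresses are assumptions made for the demo.

```python
import ipaddress

# Assumption: the pod CIDR quoted in the Calico example above.
POD_CIDR = ipaddress.ip_network("192.168.0.0/16")


def is_pod_ip(address: str) -> bool:
    """Return True if the address falls inside the pod CIDR."""
    return ipaddress.ip_address(address) in POD_CIDR


print(POD_CIDR.num_addresses)      # 65536 addresses available in a /16
print(is_pod_ip("192.168.194.4"))  # True: inside the pod range
print(is_pod_ip("10.0.0.7"))       # False: outside the pod range
```

A check like this is handy when an address shows up in a log and you want to know whether it could belong to a pod at all.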

In this article, we will focus on the networking setup for nodes and pods. For other aspects of k8s networking, read the other articles that are part of this series.

Node & Pods Networking.

Nodes & Pods have three networking needs:

- Pod-internal networking (where multiple containers in the same pod communicate with each other)

- Pod to Pod on the same node

- Pod to Pod on different nodes

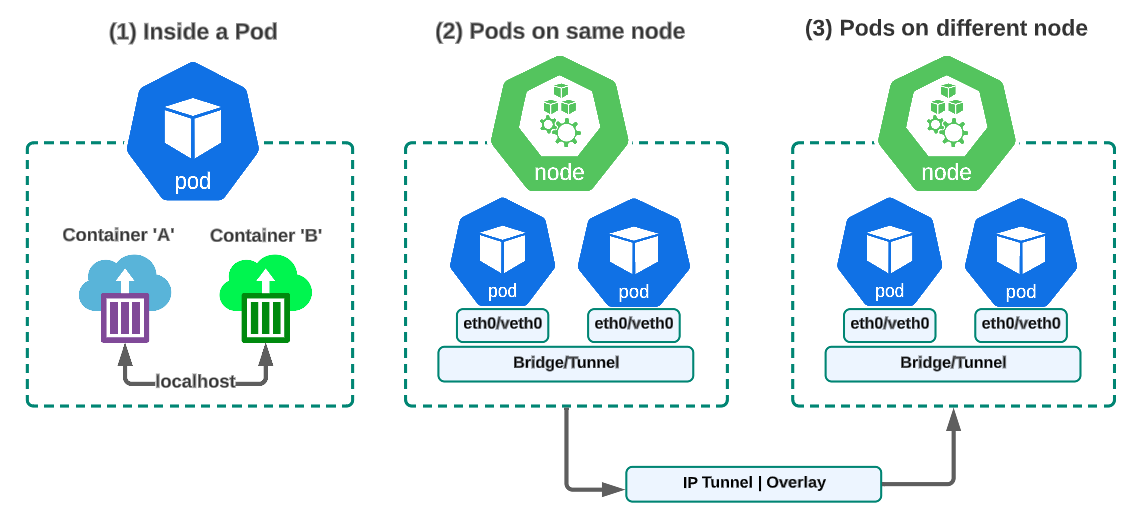

(1) Inside a Pod

Containers running inside a pod are able to pass traffic to each other through the pod's localhost interface. Because these containers run within the scope of a single pod, which has one networking namespace, one IP address and one port range, they share:

- The same networking namespace

- The same IP address

- The same port ranges
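To make the shared namespace concrete, here is a small sketch that simulates two containers of one pod as two sockets in a single Python process. It is an analogy, not how Kubernetes wires containers: the "containers" are just a thread and the main program, and all names are made up for the demo. It shows both halves of the list above: traffic flows over localhost, and a port taken by one container cannot be bound by the other.

```python
import socket
import threading


def serve_once(server: socket.socket) -> None:
    """Accept one connection and echo the payload back in upper case."""
    conn, _ = server.accept()
    with conn:
        conn.sendall(conn.recv(1024).upper())


# "Container A": listen on the shared loopback interface.
# Port 0 asks the OS for any free port.
server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))
server.listen(1)
port = server.getsockname()[1]
worker = threading.Thread(target=serve_once, args=(server,))
worker.start()

# "Container B": reach container A through localhost; no pod IP is needed.
with socket.create_connection(("127.0.0.1", port)) as client:
    client.sendall(b"hello from container b")
    reply = client.recv(1024)
worker.join()

# Shared port range: a second listener on the same port is refused.
clash = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
try:
    clash.bind(("127.0.0.1", port))
    port_conflict = False
except OSError:
    port_conflict = True
finally:
    clash.close()
    server.close()

print(reply)          # b'HELLO FROM CONTAINER B'
print(port_conflict)  # True
```

This is why two containers in the same pod cannot both listen on, say, port 80.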

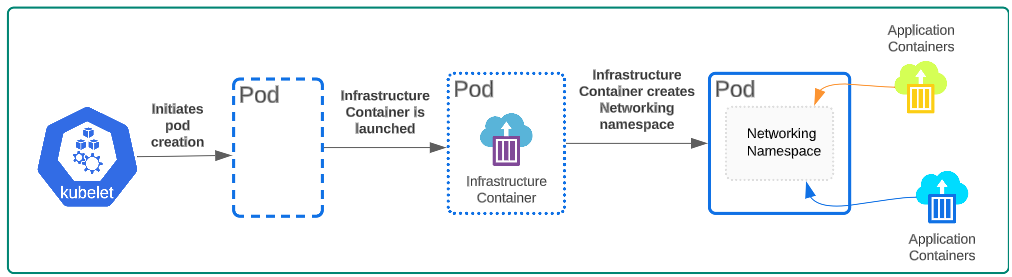

During its 'bootstrapping', a pod launches an Infrastructure Container, which is responsible for putting in place all of the pod's networking bells and whistles. It creates a networking namespace (i.e. a little virtual space inside the pod that has networking interfaces and other components) which is used by every application container that runs inside the pod. The lifecycle of the Infrastructure Container is tied to the lifecycle of the pod: when the pod dies, its internal networking setup is eliminated as well.

The Pod itself gets an IP address through which external systems can, transitively, access containers running in a Pod.

What about (2) Pods on the same node and (3) Pods on different nodes?

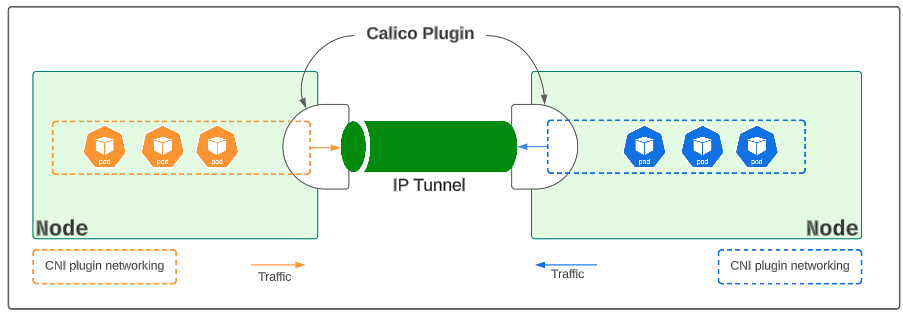

We already know k8s does not have any built-in networking implementation. Rather, it relies on helpers, that is, CNI-compliant plugins (like Calico, Flannel, Weave and others), to establish and manage networking for the cluster.

Let's examine Calico, a CNI standard networking plugin for implementing k8s networking.

Calico (or any other CNI plugin) has to satisfy Salient Fact # 1.

A plugin like Calico satisfies this requirement through the use of IP Tunnels or Bridges.

Like a typical bridge or tunnel in the real world, an IP tunnel has two ends, each connected to a node. Whenever a pod on Node A wants to send traffic to a pod on Node B, the IP tunnel takes care of encapsulating the data to be sent, all the while keeping the real IP addresses of both the source and destination pods.
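The encapsulation idea can be sketched in a few lines of Python. This is a toy model, not Calico's implementation: the `Packet` class, the two helper functions and every address below are hypothetical. The point it illustrates is that the pod packet travels inside an outer node-to-node packet and comes out the other end with its original source and destination untouched.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    payload: Union[bytes, "Packet"]  # raw data, or an inner packet when tunnelled


def encapsulate(inner: Packet, node_src: str, node_dst: str) -> Packet:
    """Wrap the pod packet in an outer packet addressed node to node."""
    return Packet(src=node_src, dst=node_dst, payload=inner)


def decapsulate(outer: Packet) -> Packet:
    """Strip the outer header and recover the untouched pod packet."""
    if not isinstance(outer.payload, Packet):
        raise ValueError("packet is not encapsulated")
    return outer.payload


# Hypothetical addresses, for illustration only.
pod_packet = Packet(src="192.168.1.10", dst="192.168.2.20", payload=b"GET /")
on_the_wire = encapsulate(pod_packet, node_src="10.0.0.1", node_dst="10.0.0.2")
delivered = decapsulate(on_the_wire)

print(on_the_wire.src, "->", on_the_wire.dst)  # 10.0.0.1 -> 10.0.0.2
print(delivered.src, "->", delivered.dst)      # 192.168.1.10 -> 192.168.2.20
```

Because the inner packet is never rewritten, the receiving pod sees the sender's real pod IP, which is exactly what Salient Fact # 1 asks for.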

How is Calico (or any other CNI plugin) installed?

Installed = download the YAML or binary for the plugin, and then configure it to work with the kubernetes cluster.

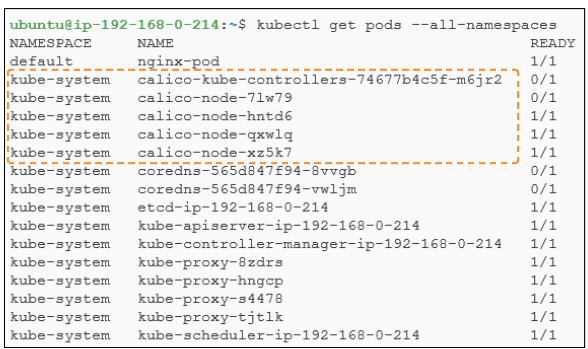

Calico and other CNI plug-ins are installed in the form of pods that become part of the Kubernetes cluster. As shown in this article, once the core Kubernetes components are configured/installed, a YAML manifest for Calico is applied to the cluster.

As a result, DaemonSet-managed pods are deployed on each node in the cluster (in the kube-system namespace).
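One way to confirm that every node really received its plugin pod is to compare the node list with the pod list. The sketch below works on dictionaries shaped like the JSON returned by `kubectl get nodes -o json` and `kubectl get pods -n kube-system -o json`. The function name, the sample data and the `calico-node` name prefix are assumptions for illustration; check the pod names in your own cluster.

```python
def nodes_missing_plugin(nodes: dict, pods: dict, name_prefix: str = "calico-node") -> list:
    """Return the names of nodes that have no pod whose name starts with name_prefix."""
    covered = {
        pod["spec"].get("nodeName")
        for pod in pods["items"]
        if pod["metadata"]["name"].startswith(name_prefix)
    }
    return sorted(
        node["metadata"]["name"]
        for node in nodes["items"]
        if node["metadata"]["name"] not in covered
    )


# Hypothetical sample data shaped like kubectl's JSON output.
sample_nodes = {"items": [
    {"metadata": {"name": "k8scp"}},
    {"metadata": {"name": "worker-1"}},
    {"metadata": {"name": "worker-2"}},
]}
sample_pods = {"items": [
    {"metadata": {"name": "calico-node-aaaaa"}, "spec": {"nodeName": "k8scp"}},
    {"metadata": {"name": "calico-node-bbbbb"}, "spec": {"nodeName": "worker-1"}},
    {"metadata": {"name": "coredns-ccccc"}, "spec": {"nodeName": "worker-2"}},
]}

print(nodes_missing_plugin(sample_nodes, sample_pods))  # ['worker-2']
```

An empty list means every node is covered; any name in the list is a node where pod networking is likely to misbehave.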

Enough talk, more demo.

For our deep dive, we will use the 4 node cluster that was created as part of the article K8s Installation: The What's and Why's.

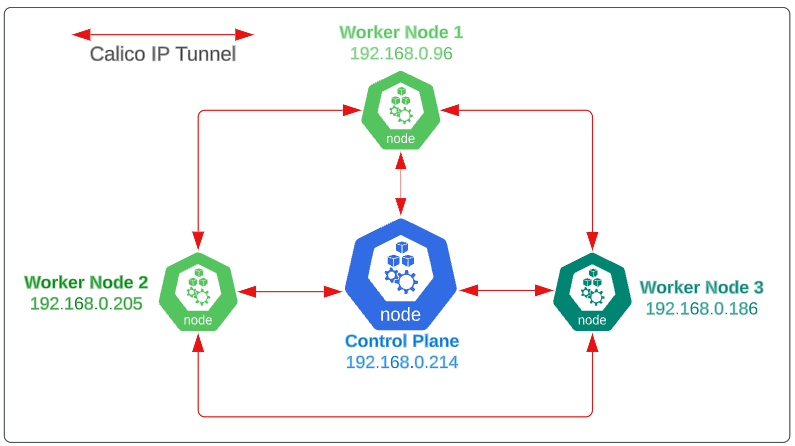

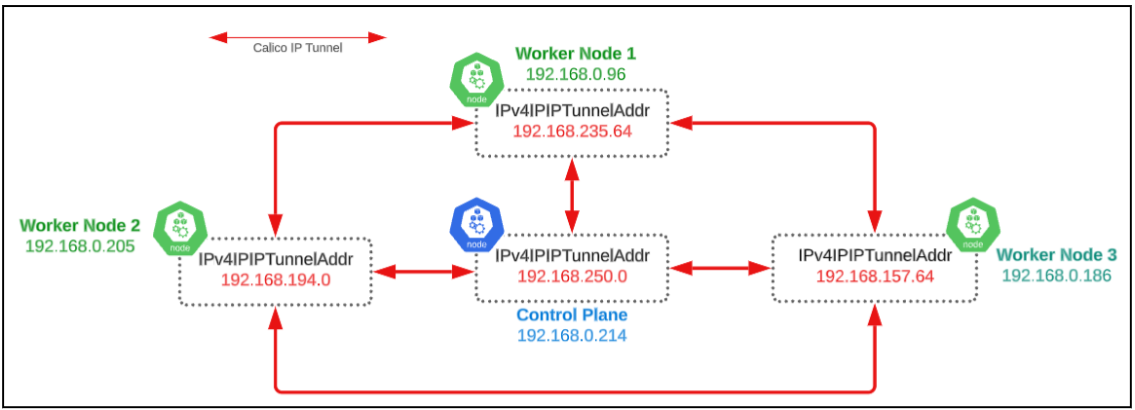

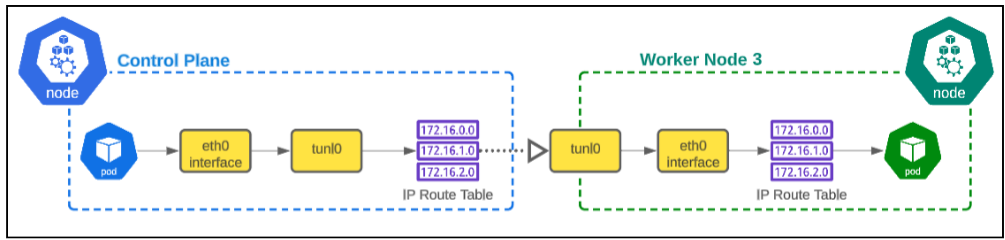

In Figure 7 above, we can see that each node is connected to another through an intermediary IP tunnel.

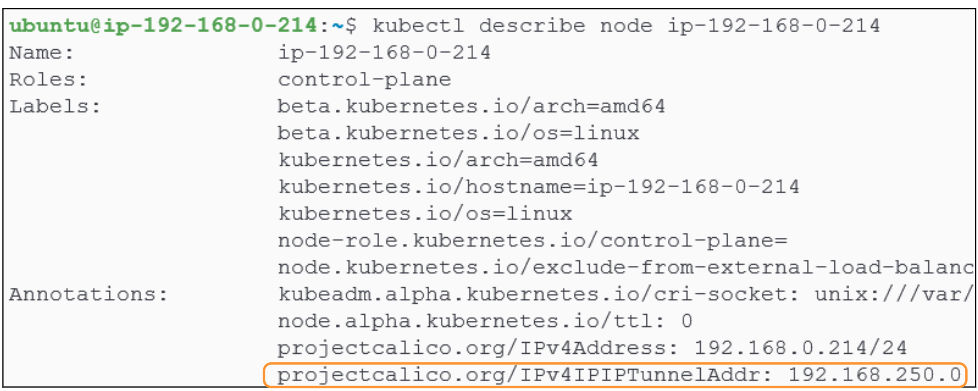

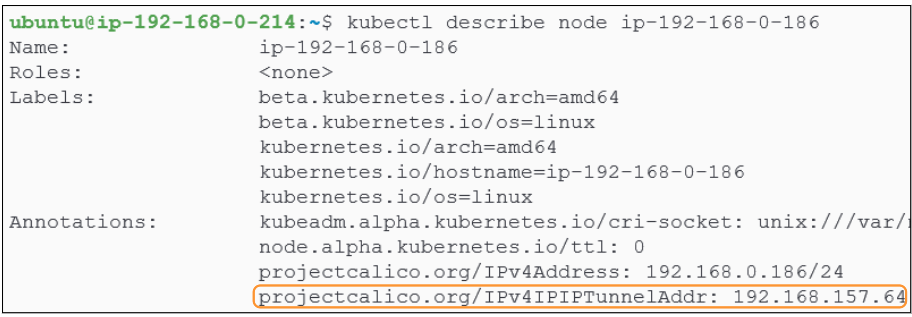

We can look at a node's networking metadata using kubectl describe node <name of node>. In particular, we are interested in the Annotations section for each node.

Describing the Control Plane node, we notice an annotation titled 'projectcalico.org/IPv4IPIPTunnelAddr'. The IP address shown in Figure 8 (@192.168.250.0) is the end of the IP tunnel servicing the Control Plane.

We can also re-run the command to find the IP address for the end of the IP tunnel that opens up into Worker Node 3 (@192.168.157.64).
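If you would rather pull the tunnel address out programmatically than read it off the describe output, a sketch along these lines may help. It assumes `kubectl` is on the PATH and that Calico stores the address under the annotation key `projectcalico.org/IPv4IPIPTunnelAddr`; treat that key as an assumption and compare it with what your own node shows. The function names are made up for the example, and the sample dictionary stands in for real cluster output.

```python
import json
import subprocess
from typing import Optional

# Assumption: the annotation key Calico writes in IP-in-IP mode.
TUNNEL_ANNOTATION = "projectcalico.org/IPv4IPIPTunnelAddr"


def tunnel_address(node: dict) -> Optional[str]:
    """Extract the tunnel address from a node object, or None if it is absent."""
    return node.get("metadata", {}).get("annotations", {}).get(TUNNEL_ANNOTATION)


def fetch_node(name: str) -> dict:
    """Fetch a node object from the cluster as JSON (requires kubectl access)."""
    result = subprocess.run(
        ["kubectl", "get", "node", name, "-o", "json"],
        check=True, capture_output=True, text=True,
    )
    return json.loads(result.stdout)


# Offline sample shaped like kubectl's JSON output, using the address from Figure 8.
sample = {"metadata": {"name": "k8scp",
                       "annotations": {TUNNEL_ANNOTATION: "192.168.250.0"}}}

print(tunnel_address(sample))                     # 192.168.250.0
print(tunnel_address({"metadata": {"name": "x"}}))  # None
```

Against a live cluster, `tunnel_address(fetch_node("<name of node>"))` would do the same job as scanning the Annotations section by eye.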

If a pod on the Control Plane wants to send traffic to a pod on Worker Node 3, the Control Plane pod sends the data packets to its end of the IP tunnel (with IP address 192.168.250.0). This end passes the traffic to the end of the IP tunnel for Worker Node 3 (@192.168.157.64) and eventually on to the target pod.

The IP tunnel uses the real IP addresses of the pods and nodes involved and, therefore, there is no need to perform any kind of Network Address Translation (NAT).

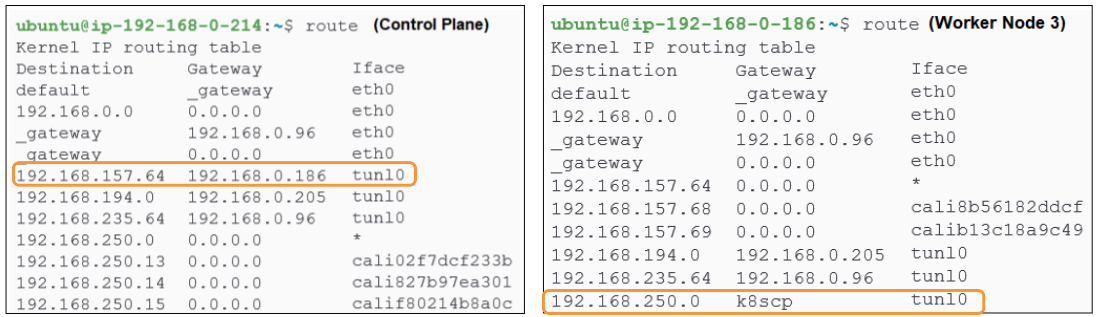

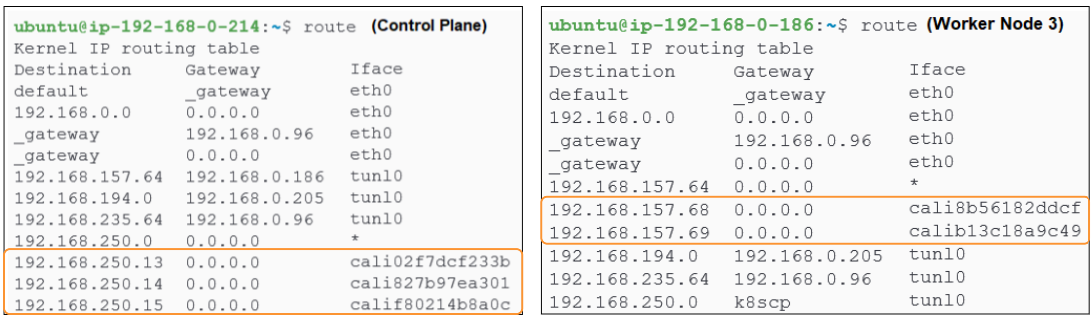

We can also take a look at the OS-level IP routing tables on both the Control Plane and Worker Node 3 to confirm the IP addresses documented.

The image on the left is from the Control Plane while the one on the right is Worker Node 3.

The image on the left could be read as:

Control Plane wants to send traffic to Worker Node 3 (@192.168.0.186) and the gateway (or door) to open is the IP tunnel end point @192.168.157.64 which opens right into Worker Node 3.

Similarly, the right side of Figure 11 shows a situation where:

Worker Node 3 wants to send data to the Control Plane (@192.168.0.214 or k8scp) and the gateway which facilitates this communication between Worker Node 3 and the Control Plane is the end of the IP tunnel (@192.168.250.0) which opens inside the Control Plane.
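Reading a routing table boils down to one rule: among all entries whose destination range contains the target address, the most specific one wins. The sketch below models that rule in Python. The table entries are hypothetical and deliberately not copied from the figures; only the interface names `tunl0` and `eth0` echo what appears in this article.

```python
import ipaddress
from typing import List, NamedTuple, Optional


class Route(NamedTuple):
    destination: str  # destination network in CIDR form
    gateway: str      # next hop for traffic to that network
    iface: str        # interface the traffic leaves through


# Hypothetical routing table, for illustration only.
ROUTES = [
    Route("0.0.0.0/0", "10.1.0.1", "eth0"),     # default route
    Route("10.0.1.0/26", "10.1.0.2", "tunl0"),  # traffic that enters the tunnel
    Route("10.0.2.0/26", "10.1.0.3", "tunl0"),
]


def lookup(dest_ip: str, routes: List[Route]) -> Optional[Route]:
    """Pick the matching route with the longest prefix (most specific match)."""
    ip = ipaddress.ip_address(dest_ip)
    matches = [r for r in routes if ip in ipaddress.ip_network(r.destination)]
    if not matches:
        return None
    return max(matches, key=lambda r: ipaddress.ip_network(r.destination).prefixlen)


print(lookup("10.0.2.17", ROUTES).iface)  # tunl0
print(lookup("8.8.8.8", ROUTES).iface)    # eth0
```

With this rule in mind, each row of the tables in the figures answers the question "for this destination, which gateway and which interface?".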

Pod-2-Pod Networking.

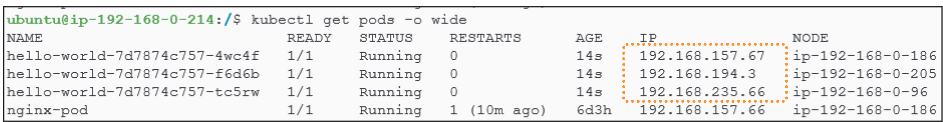

To get a deeper perspective on a cluster's pod network in action, let's create a simple deployment (download yaml provided).

The allocation of IP addresses to pods is entirely managed by the CNI plugin (Calico in our case).

As mentioned in K8s Installation: The What's and Why's, the Calico manifest defines a CIDR pool from which pod IP addresses are drawn (see Figure 32 in K8s Installation: The What's and Why's).
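To see how one pool can feed many nodes, it helps to picture the pool being carved into smaller blocks. The sketch below assumes a pool of 192.168.0.0/16 and a block size of /26, which I understand to be Calico's default; both are assumptions about this cluster, so adjust them to match your manifest. The helper name `block_for` is made up for the example, and the addresses are ones that appear in this article.

```python
import ipaddress

POOL = ipaddress.ip_network("192.168.0.0/16")  # assumption: the pool in the manifest
BLOCK_PREFIX = 26                              # assumption: Calico's default block size


def block_for(address: str) -> ipaddress.IPv4Network:
    """Return the /26 block of the pool that contains the given address."""
    block = ipaddress.ip_network(f"{address}/{BLOCK_PREFIX}", strict=False)
    if not block.subnet_of(POOL):
        raise ValueError(f"{address} is outside the pool {POOL}")
    return block


for addr in ("192.168.250.0", "192.168.157.64", "192.168.194.4"):
    print(addr, "->", block_for(addr))
# 192.168.250.0 -> 192.168.250.0/26
# 192.168.157.64 -> 192.168.157.64/26
# 192.168.194.4 -> 192.168.194.0/26
```

Under these assumptions the three addresses land in three different blocks, which fits the picture of each node handing out pod IPs from its own slice of the pool.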

Crack open any one of the pods created using the kubectl command in Figure 13. Of course, you have to replace the name of the pod (hello-world-7d7874c757-f6d6b) with one of yours.

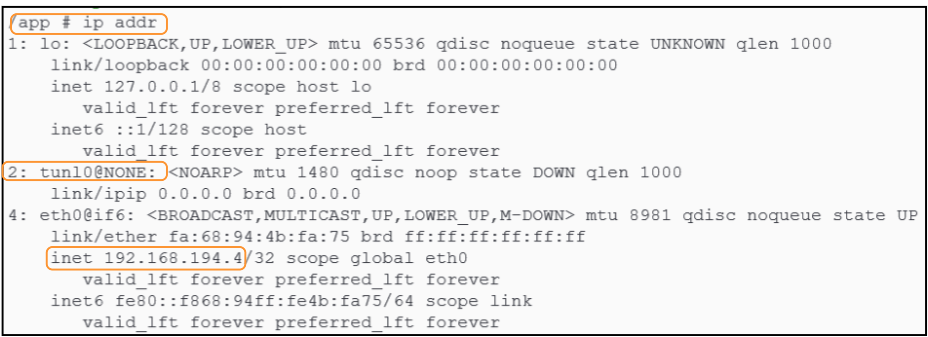

The tunl0@NONE and eth0 (with IP address of 192.168.194.4) are the interfaces that daisy-chain and facilitate traffic ingress or egress for pods.

As shown in Figure 11, traffic flows from a pod inside a node using the tunnel interface and once it gets to the recipient node, the tunnel interface at that end will forward the traffic to the eth0 interface for the pod.

As soon as the tunnel has done its job (that is, passed the traffic on to the eth0 interface), Calico directs the data to one of the pods running on the node using the interfaces whose names begin with 'cali'.

- k8s requires CNI standard networking plug-ins for establishing and managing a cluster wide network.

- CNI plug-ins like Calico create IP Tunnels between nodes in a cluster and are responsible for establishing the traffic highway between nodes/pods.

- Use route to examine the pathways set between nodes/pods.

Why should I care about this topic?

The information provided here can be used for troubleshooting network configuration errors in a Kubernetes cluster.

I write to remember, and if, in the process, I can help someone learn about Containers, Orchestration (Docker Compose, Kubernetes), GitOps, DevSecOps, VR/AR, Architecture, and Data Management, that is just icing on the cake.