Docker Networking Demystified Part 4: Service Discovery and Ingress.

Learn about allowing access to your containers using Docker Swarm, Service Discovery and Ingress.

- Part 1: Introduction to Container Networking

- Part 2: Single Host Bridge Networks

- Part 3: Multi-Host Overlay Networks

- Part 4: Service Discovery and Ingress

As per Merriam-Webster, ingress is the act of entering. Within the context of a dockerized world, ingress, then, refers to the ability of a Docker Swarm (and the nodes in its cluster) to allow external traffic to successfully reach a container (or containers).

However, before we can talk about ingress, we have to understand the Service Discovery mechanism built into Docker.

What is Service Discovery?

As per Wikipedia, service discovery is the process of automatically detecting devices and services on a computer network. This reduces the need for manual configuration by users and administrators.

Service discovery (or container discovery) can be difficult. Containers for a service come up, die, get re-spun, and go through rolling updates or patch management more often than we realize. In an environment this volatile, the IP address of a container can keep changing, and if someone/something is not making sure to update it periodically, you can expect failed connections galore.

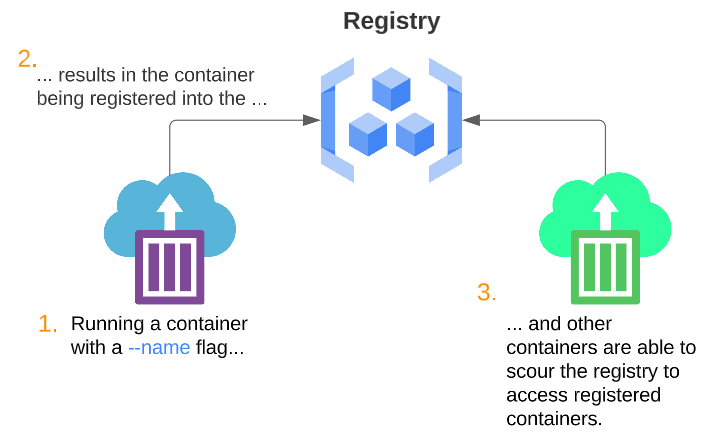

Service discovery: Basic flow

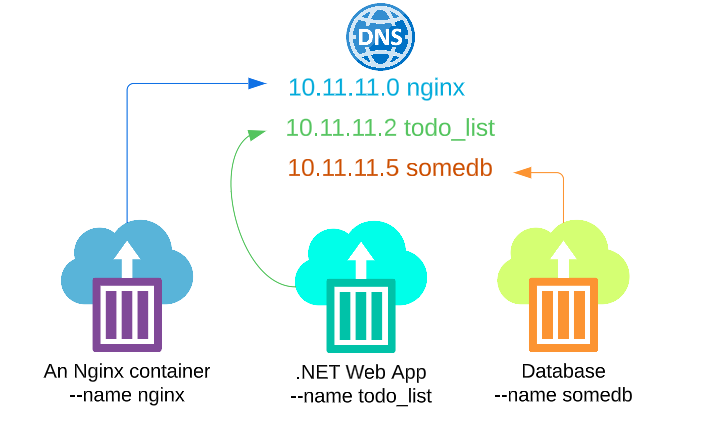

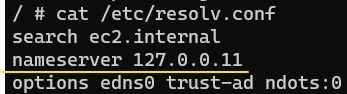

Docker's service Registry uses DNS for service name resolution (this is Docker's embedded DNS server, not the Docker Registry that stores images). Every time a new container is created (as a result of an update to the business logic inside the container or because the container was re-spawned after a crash), the name of the container and its IP are added to the Registry.

If any container is looking for another container (or service), it looks up the target container's (or service's) IP by name: it queries the Registry, receives a response and makes the connection.
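To make that register-then-look-up flow concrete, here is a minimal Python sketch. It is a toy model of the idea only, not how Docker implements its DNS; the class name `ToyRegistry`, the container name `web.1` and the IPs are all made up for illustration.

```python
# Toy model of name-based service discovery (NOT Docker's real implementation).
class ToyRegistry:
    def __init__(self):
        self._records = {}  # container name -> current IP address

    def register(self, name, ip):
        """Record (or overwrite) the IP for a container name."""
        self._records[name] = ip

    def resolve(self, name):
        """Return the IP currently recorded for name, or None if unknown."""
        return self._records.get(name)


registry = ToyRegistry()

# A container comes up and is recorded under its name (hypothetical values).
registry.register("web.1", "10.0.1.3")
first_ip = registry.resolve("web.1")
print(first_ip)

# The container crashes and is re-spawned with a new IP.
# Callers keep asking for the same name and get the fresh address.
registry.register("web.1", "10.0.1.7")
second_ip = registry.resolve("web.1")
print(second_ip)
```

The point of the sketch is that callers only ever hold on to the name; the IP behind it is free to change.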

Putting our money where our mouths are.

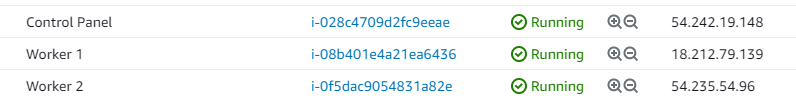

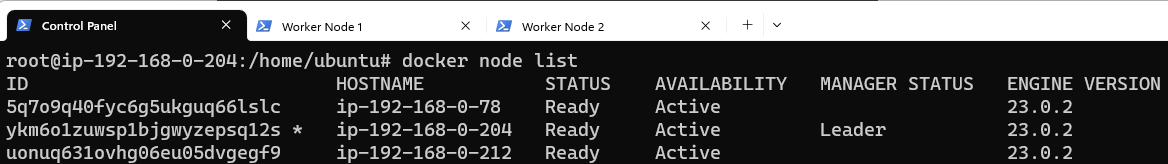

For our hands-on work, I will use 3 EC2 instances:

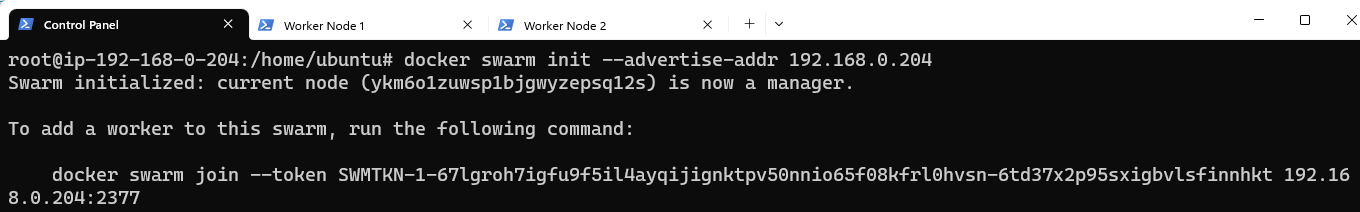

Follow the instructions printed by docker swarm init for adding nodes to the cluster.

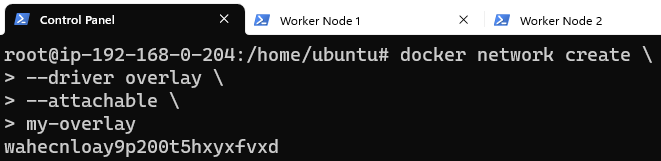

Create an attachable Overlay network (and I will name this network my-overlay):

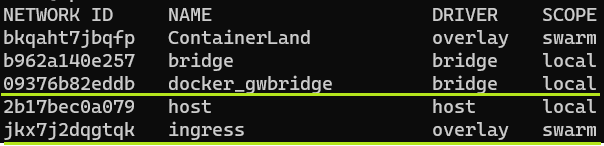

Confirm that the Swarm did indeed get created:

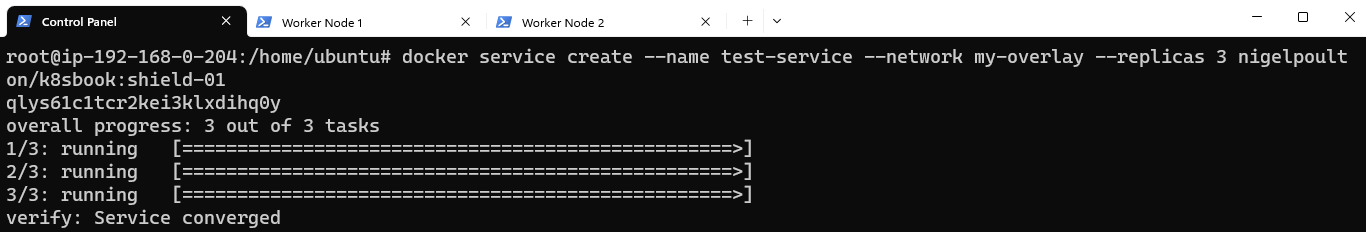

With the Overlay ready, add a service with 3 replicas to it. The replicas will be spread out, one to each node.

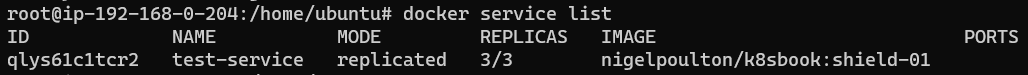
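Since the commands above live in screenshots, here is a small Python sketch that builds the two Docker CLI invocations as argument lists and prints them as a dry run. The flags are standard Docker CLI flags, but the service name `my-service` and the image `nginx:alpine` are my assumptions and may not match what the screenshots use.

```python
import shlex


def overlay_network_cmd(network):
    """argv for creating an attachable overlay network."""
    return ["docker", "network", "create",
            "--driver", "overlay", "--attachable", network]


def replicated_service_cmd(name, image, network, replicas):
    """argv for creating a replicated service attached to a network."""
    return ["docker", "service", "create",
            "--name", name,
            "--replicas", str(replicas),
            "--network", network,
            image]


# Hypothetical service name and image; only my-overlay comes from the article.
commands = [
    overlay_network_cmd("my-overlay"),
    replicated_service_cmd("my-service", "nginx:alpine", "my-overlay", 3),
]

# Dry run: print what would be executed on a manager node.
for argv in commands:
    print(shlex.join(argv))
```

To actually run them on a manager node, each list could be passed to `subprocess.run(argv, check=True)`.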

Confirm there are indeed 3 replicas on the Swarm:

As mentioned earlier, the creation of these 3 service replicas MUST have resulted in 3 IPs (one for each service replica) mapped to their names in Docker's Registry.

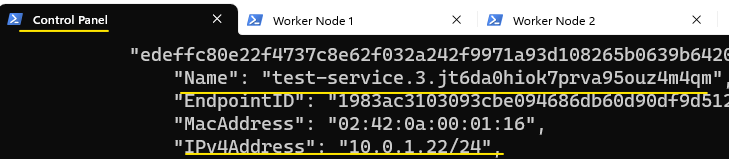

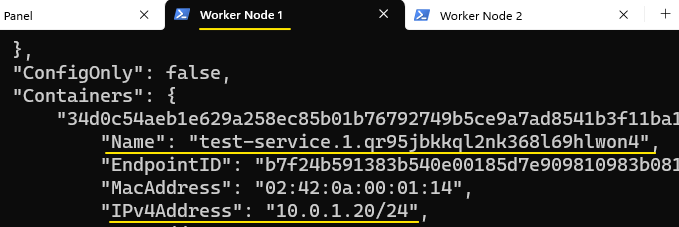

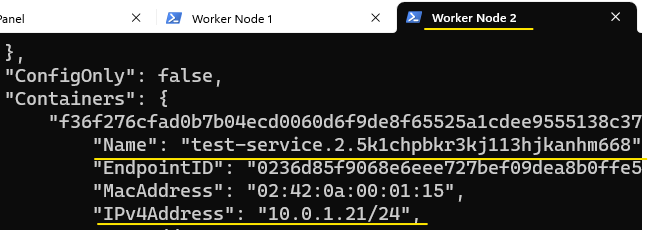

By running docker network inspect my-overlay on each of the 3 nodes, we can get the name and IP of the container on that node (as it's been recorded in the Registry):

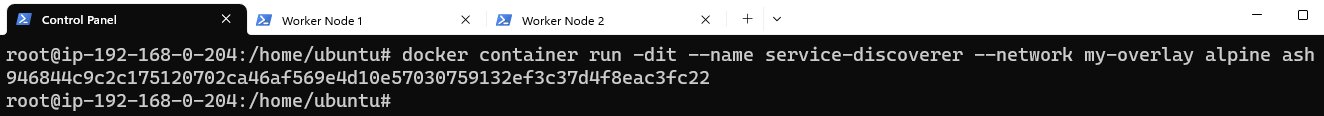
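If you would rather not read the JSON by eye, the name-to-IP pairs can be pulled out with a few lines of Python. This is a sketch that assumes the usual shape of docker network inspect output (a JSON list whose entries carry a `Containers` map with `Name` and `IPv4Address` fields); the sample document below is hand-written for illustration, not real output from my nodes.

```python
import json


def container_ips(inspect_json):
    """Map container name -> IPv4 address from `docker network inspect` JSON."""
    result = {}
    for network in json.loads(inspect_json):
        # "Containers" may be missing or null when nothing is attached.
        for info in (network.get("Containers") or {}).values():
            # Addresses are reported in CIDR form, e.g. "10.0.1.3/24".
            result[info["Name"]] = info["IPv4Address"].split("/")[0]
    return result


# Hand-written sample with hypothetical IDs, names and addresses.
sample = """
[
  {
    "Name": "my-overlay",
    "Containers": {
      "abc123": {"Name": "my-service.1.xyz", "IPv4Address": "10.0.1.3/24"}
    }
  }
]
"""

ips = container_ips(sample)
print(ips)
```

On a node, the input could come from something like `docker network inspect my-overlay` piped into the script.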

To test Service discovery, we will add a stand-alone container to my-overlay on Control Panel and attempt to access the service/container on one of the nodes:

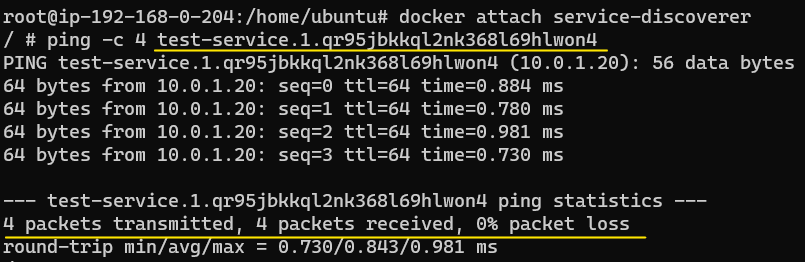

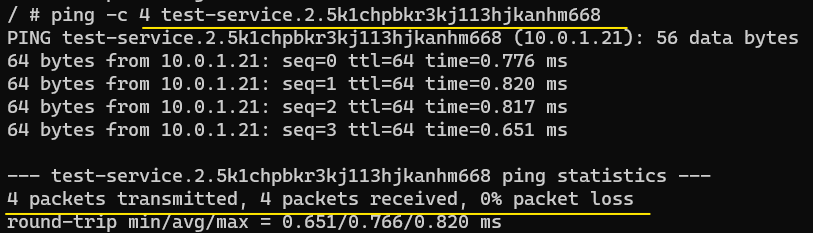

To test name resolution, attach to service-discoverer and ping containers on Worker Node 1 and Worker Node 2 by using their names:

However, pinging individual containers by name is not feasible, especially when there are many replicas in a service and each replica has the kind of long name that the containers on Worker Node 1 and Worker Node 2 have. Additionally, if for some reason the container on either Worker Node 1 or Worker Node 2 dies, a new one may be spun up, which will have its own IP address (and cryptic name).

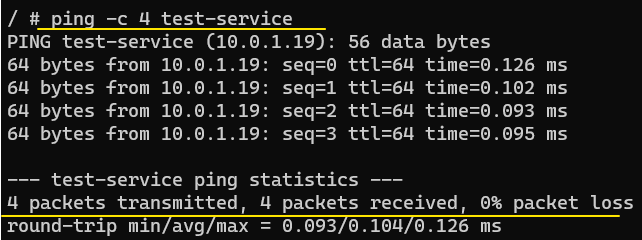

Ping-test the service name as opposed to the container name of each of the replicas.
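Why does the service name keep working when replicas come and go? In Swarm's default mode the service name resolves to a single virtual IP, and traffic to that IP is spread across the replicas behind it. Here is a toy Python sketch of that idea, assuming plain round-robin as a stand-in for the real load balancer; the class `ToyService`, the service name and every IP are hypothetical.

```python
# Toy model: one stable virtual IP in front of changing replica IPs.
class ToyService:
    def __init__(self, name, vip, replica_ips):
        self.name = name
        self.vip = vip                      # stable address clients resolve
        self.replica_ips = list(replica_ips)
        self._turn = 0

    def route(self):
        """Pick the next replica in round-robin order."""
        ip = self.replica_ips[self._turn % len(self.replica_ips)]
        self._turn += 1
        return ip

    def replace_replica(self, old_ip, new_ip):
        """Simulate a replica dying and being re-spawned with a new IP."""
        self.replica_ips[self.replica_ips.index(old_ip)] = new_ip


service = ToyService("my-service", "10.0.1.2",
                     ["10.0.1.3", "10.0.1.4", "10.0.1.5"])
dns = {service.name: service.vip}

vip_before = dns["my-service"]
targets = [service.route() for _ in range(4)]
print(vip_before, targets)

# A replica is replaced; the name still resolves to the same virtual IP.
service.replace_replica("10.0.1.4", "10.0.1.9")
vip_after = dns["my-service"]
print(vip_after, service.replica_ips)
```

The caller never needs to learn the cryptic replica names or their new IPs; the name and the virtual IP stay put.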

Finally, ingress networking.

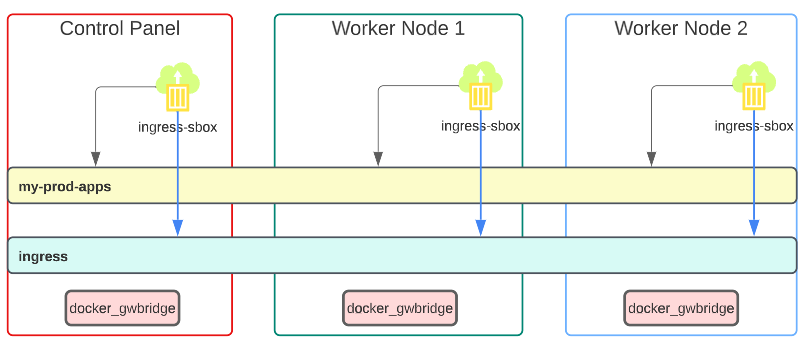

In Part 3: Multi-Host Overlay Networks, it was mentioned that an Overlay network called ingress, along with a Bridge called docker_gwbridge, is created automatically when we create a Docker Swarm.

The ingress will span EVERY node/container in the Swarm by default (yup, no discrimination here).

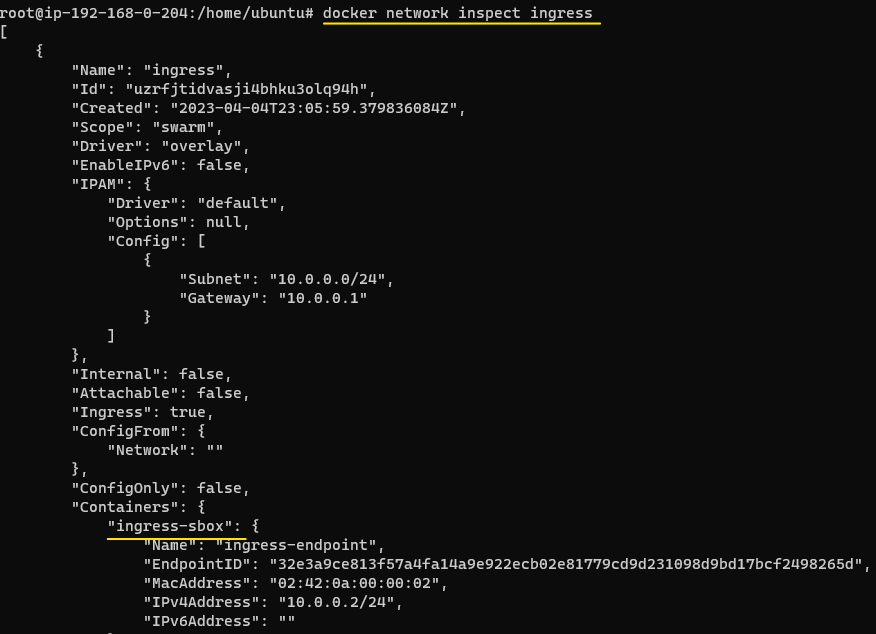

Let's inspect the ingress network quickly:

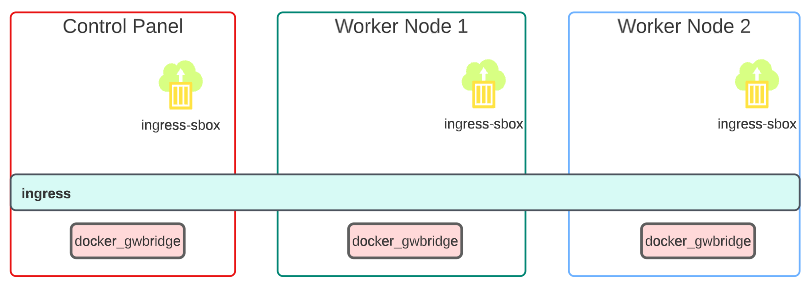

As mentioned, every ingress network comes with a docker_gwbridge. In addition, each ingress network will also have a container called ingress-sbox, which is a part of the routing mechanism in an ingress Overlay.

For purposes of ingress, whenever a packet comes to the cluster, the ingress-sbox, along with the docker_gwbridge, will make sure the packet is received by a container in the Swarm.

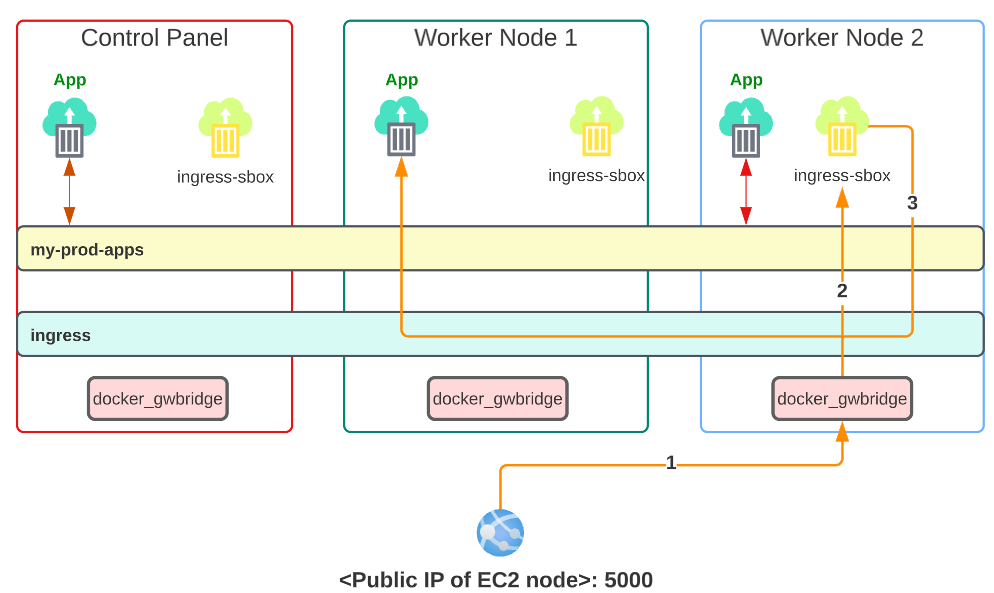

Visually, the network is configured as shown:

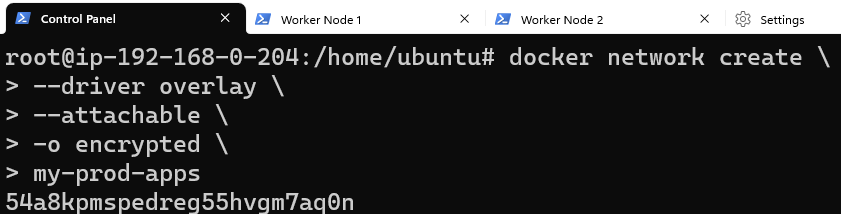

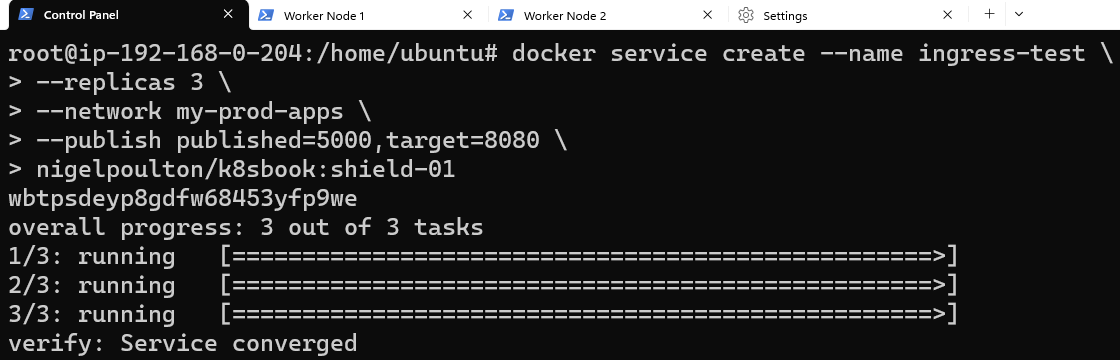

Eating our own dog food, let the ingress Overlay be for traffic ingestion and create a new Overlay for app deployment.

Create a new Overlay and call it my-prod-apps.

Visually, the layout is:

Deploy a service with 3 replicas on my-prod-apps:

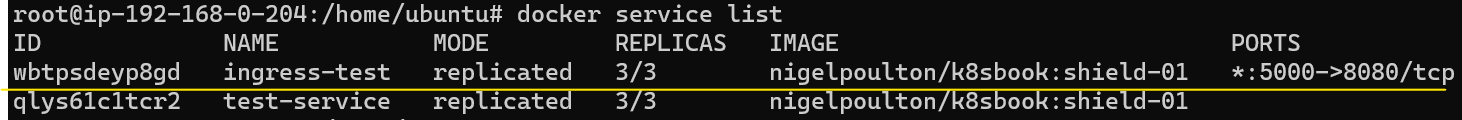

Check the services were deployed properly:

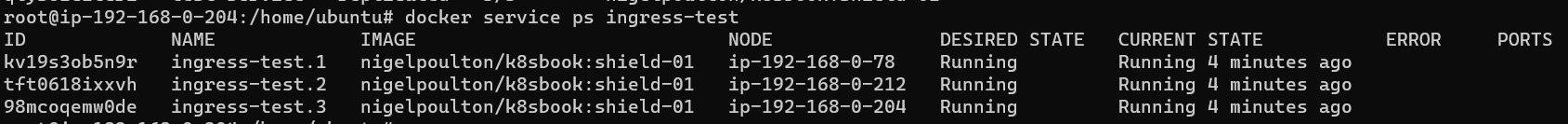

Display some information about the services as well:

Test ingress to confirm the plumbing is all in place.

Open a browser window and type the public IP of ANY one of the EC2 instances followed by port 5000 (<public IP>:5000). The web page inside the App containers will be displayed.

Then replace the public IP with that of each of the 2 remaining EC2 instances (followed by 5000); the same page should be displayed.
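If clicking through three browser tabs feels tedious, the same check can be scripted. This is a minimal Python sketch using only the standard library; the helper names `node_url` and `probe` are mine, and it assumes the App answers plain HTTP on port 5000 at the root path.

```python
import sys
import urllib.error
import urllib.request


def node_url(host, port=5000):
    """Build the URL a browser would hit for a given node."""
    return f"http://{host}:{port}/"


def probe(host, port=5000, timeout=3.0):
    """Return the HTTP status code from the node, or None if unreachable."""
    try:
        with urllib.request.urlopen(node_url(host, port), timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # The server answered, just not with a success status.
        return err.code
    except (urllib.error.URLError, OSError):
        # Connection refused, timed out, DNS failure, and so on.
        return None


if __name__ == "__main__":
    # Pass the public IPs of the nodes as command-line arguments.
    for host in sys.argv[1:]:
        print(host, probe(host))
```

Run it with the three public IPs as arguments; if ingress is wired up correctly, I would expect each node to report a status rather than `None`.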

So what really happened?

When <public IP>:5000 was entered in the browser address bar, a request was sent to the server hosting the containers/services.

1. The traffic was passed onto the docker_gwbridge on one of the nodes (in our image, we assume it's Worker Node 2, but it could have been any one of the three).

2. From the docker_gwbridge, traffic was sent to ingress-sbox which pushed....

3. ... it to the ingress Overlay which carried the traffic all the way to the App on one of the nodes.
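The hops above can be traced in a few lines of Python. This is a toy model of the path as described in this article, not of Docker's internals: the replica names are hypothetical, and picking the target node by round-robin is my assumption.

```python
def ingress_path(entry_node, replicas_by_node, turn):
    """List the hops a request takes, following the steps described above."""
    nodes = sorted(replicas_by_node)
    # Assumption: the target replica is chosen round-robin across nodes.
    target = nodes[turn % len(nodes)]
    return [
        f"{entry_node}: docker_gwbridge",
        f"{entry_node}: ingress-sbox",
        "ingress Overlay",
        f"{target}: {replicas_by_node[target]}",
    ]


# Hypothetical replica names, one per node.
replicas = {
    "Control Panel": "app.1",
    "Worker Node 1": "app.2",
    "Worker Node 2": "app.3",
}

# The request enters at Worker Node 2 but may be served from another node.
path = ingress_path("Worker Node 2", replicas, turn=0)
for hop in path:
    print(hop)
```

Note that the node receiving the request and the node serving it do not have to be the same, which is why any of the three public IPs works.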

- Service Discovery

- ingress Overlay

- User-defined Overlays

- docker_gwbridge and its role in routing traffic

- ingress-sbox and its role in routing traffic

I write to remember and if in the process, I can help someone learn about Containers, Orchestration (Docker Compose, Kubernetes), GitOps, DevSecOps, VR/AR, Architecture, and Data Management, that is just icing on the cake.