Docker Networking Demystified Part 1: Introduction to Container Networking

- Part 1: Introduction to Container Networking

- Part 2: Single Host Bridge Networks

- Part 3: Multi-Host Overlay Networks

- Part 4: Service Discovery and Ingress

Right off the bat, I have a confession to make: I am (and will likely forever be) a network newbie. Routers, DNS, IPAM, protocols, encryption, traffic forwarding, and other assorted (and scary-sounding) words from the world of networking have kept me up at night. I always had the luxury of pawning off any connectivity-related concerns to network engineers and their teams, until the world went cloud-native and I had to learn about containers (and all that comes along with them) in a jiffy.

A Docker network is a digital twin of a real network.

Begrudgingly, I opened the Docker documentation and started reading about its networking model.

I was pleasantly surprised.

Strange as it may sound, learning about Docker's networking model was extremely easy (as compared to setting up the real deal, I am sure). Because the networking model in Docker is managed through the Docker CLI, it was easy to type commands on a terminal, view its impact on the container's networking capabilities, and make fixes quickly.

So what exactly makes up a container networking model?

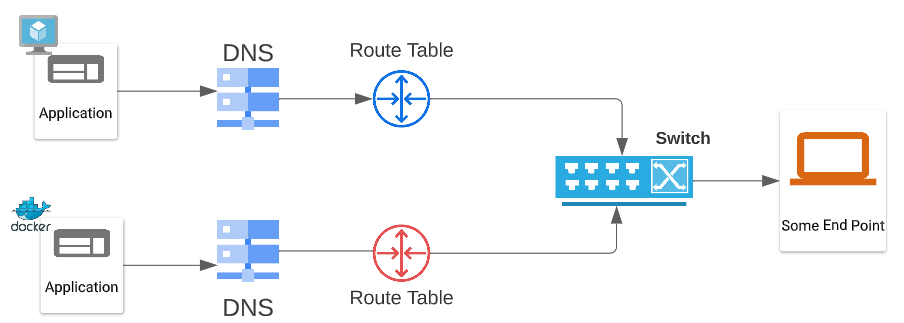

Like a real network, Docker containers have networking components, and those components go by the same names as their physical counterparts.

In fact, one could say that an application that would normally be deployed on a real server (or a VM) cannot tell a Docker network from a real one. It reminds me of a movie where the doppelganger goes undetected by the rest of the family for years, until one day the partner notices a missing birthmark.

All the app says is "I want to speak to some endpoint", and from that moment on, the connection from the app to "some endpoint" is set up, managed, and torn down by the underlying networking model (be it Docker's or otherwise).

Additionally, container networking should be able to scale up and down in a heartbeat. The last thing any of us wants in production is to try to connect to a container that is no longer alive. To ensure that this does not happen, Docker has its own IPAM (IP Address Management) and DNS modules, which are updated as and when required: IP addresses for new containers are added, and those for dead containers are removed.
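To make that IPAM and DNS bookkeeping concrete, here is a toy Python model. It is a sketch under simplifying assumptions and is not Docker's actual implementation. The class name ToyIpamDns is hypothetical. It assumes a single IPv4 network, reserves the first usable address for the gateway, and uses container names directly as DNS names.

```python
import ipaddress
from typing import Dict, List, Optional


class ToyIpamDns:
    """Hypothetical IPAM + DNS registry for a single IPv4 network.

    Not Docker's implementation: it only shows addresses and name records
    being added when containers start and removed when they die.
    """

    def __init__(self, cidr: str) -> None:
        hosts = list(ipaddress.IPv4Network(cidr).hosts())  # usable addresses only
        self.gateway = hosts[0]  # assumption: first usable address is the gateway
        self.free: List[ipaddress.IPv4Address] = hosts[1:]   # kept sorted
        self.records: Dict[str, ipaddress.IPv4Address] = {}  # DNS name -> IP

    def start(self, name: str) -> ipaddress.IPv4Address:
        """Give a new container the lowest free IP and register its name."""
        if name in self.records:
            raise ValueError(f"{name} is already running")
        if not self.free:
            raise RuntimeError("address pool exhausted")
        ip = self.free.pop(0)
        self.records[name] = ip
        return ip

    def stop(self, name: str) -> None:
        """Drop the dead container's DNS record and return its IP to the pool."""
        ip = self.records.pop(name)
        self.free.append(ip)
        self.free.sort()

    def resolve(self, name: str) -> Optional[ipaddress.IPv4Address]:
        """Look up a name; dead or unknown containers resolve to None."""
        return self.records.get(name)


if __name__ == "__main__":
    ipam = ToyIpamDns("10.0.0.0/24")
    print(ipam.gateway)          # 10.0.0.1
    print(ipam.start("web"))     # 10.0.0.2
    print(ipam.start("db"))      # 10.0.0.3
    ipam.stop("web")
    print(ipam.resolve("web"))   # None: no stale record for a dead container
    print(ipam.start("cache"))   # 10.0.0.2, reused from "web"
```

When "web" dies, its name stops resolving and its address goes back into the pool. Nobody ends up dialing a container that is gone.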

The Container Networking Model (CNM)

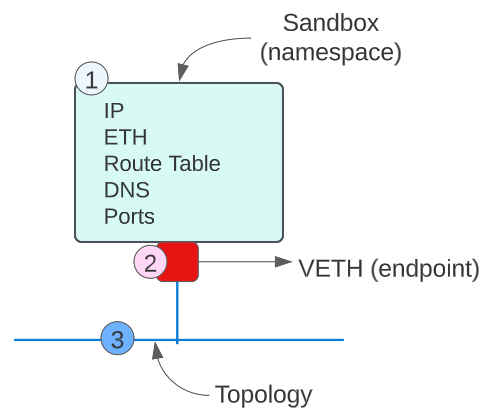

CNM is a design specification and dictates the HOW for container networking. As per this specification, a container network requires at least 3 things to function:

(1) A network namespace (also called a Sandbox) that contains all the basic networking elements like IP address, Route Table, DNS, an Ethernet adapter (more precisely, a virtual Ethernet adapter), and Ports.

(2) An endpoint (to connect the Sandbox to other Sandboxes).

(3) A network topology* (which determines the scope and reach of communication).

A network topology is the physical and logical arrangement of nodes and connections in a network. (source: www.techtarget.com)
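The CNM design names these three pieces Sandbox, Endpoint, and Network. The Python sketch below models how they relate. The classes and the can_talk helper are hypothetical illustrations, not LibNetwork's API. It also assumes that two containers can talk directly only when they have endpoints on the same network.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(eq=False)  # identity semantics: avoids recursive __eq__ on back-references
class Sandbox:
    """A container's isolated network stack (in practice, a network namespace)."""
    container: str
    endpoints: List["Endpoint"] = field(default_factory=list, repr=False)


@dataclass(eq=False)
class Endpoint:
    """Plugs exactly one sandbox into exactly one network."""
    sandbox: Sandbox
    network: "Network"


@dataclass(eq=False)
class Network:
    """A group of endpoints that can reach each other directly (the topology)."""
    name: str
    driver: str = "bridge"
    endpoints: List[Endpoint] = field(default_factory=list, repr=False)

    def join(self, sandbox: Sandbox) -> Endpoint:
        """Create an endpoint linking the sandbox to this network."""
        endpoint = Endpoint(sandbox, self)
        self.endpoints.append(endpoint)
        sandbox.endpoints.append(endpoint)
        return endpoint


def can_talk(a: Sandbox, b: Sandbox) -> bool:
    """Direct communication requires at least one shared network."""
    nets_a = {ep.network for ep in a.endpoints}  # hashable by identity (eq=False)
    nets_b = {ep.network for ep in b.endpoints}
    return bool(nets_a & nets_b)


if __name__ == "__main__":
    highway = Network("highway-to-heaven")
    isolated = Network("isolated")
    web, db, batch = Sandbox("web"), Sandbox("db"), Sandbox("batch")
    highway.join(web)
    highway.join(db)
    isolated.join(batch)
    print(can_talk(web, db))     # True
    print(can_talk(web, batch))  # False
```

A sandbox can hold several endpoints. Joining "web" to the isolated network as well would let it reach "batch" too.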

So how does the above look in a real container network?

Docker offers five such topologies: [1] None, [2] Bridge, [3] Overlay, [4] Underlay, and [5] Host, with Bridge being the default topology.

What happens when we install the Docker Daemon and run a container?

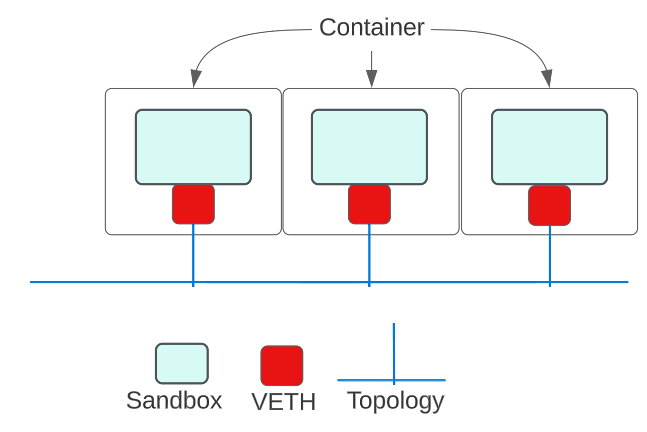

Some very smart individuals took the CNM and converted its specs into a Go networking library called LibNetwork. LibNetwork is an open-source container networking library that takes care of the basic plumbing whenever a container is launched.

If we wanted to visualize the steps involved in container networking (in general), we would witness the following sequence of events:

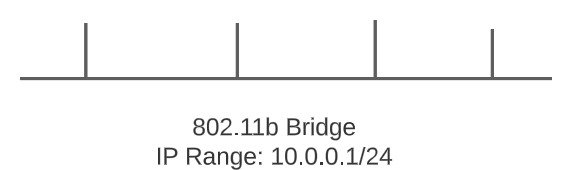

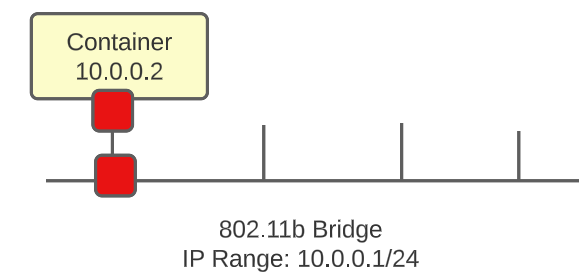

1. The Docker Daemon uses the LibNetwork module to establish an 802.1d Bridge network (which mimics a Layer 2 switch in the real world). As part of setting up this Bridge, a CIDR range is specified; the IP addresses assigned to each container come from this range.

   The 10.0.0.1 IP is allocated to the virtual router (the gateway) on the Bridge.

2. As we add containers to the Bridge, each one gets an IP address from the CIDR range AND connects to the Bridge through a VETH pair. A VETH pair acts like a virtual Ethernet cable: one end sits inside the container's network namespace, and the other is plugged into the Bridge.

3. Adding more containers keeps adding VETH pairs, and each new container gets an IP from the remaining addresses in the CIDR range.

DNS, routing tables, and the like are all taken care of by LibNetwork, without us having to worry about their upkeep.
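The three Bridge steps can be sketched in Python as a hypothetical illustration, not LibNetwork code. ToyBridge and VethPair are made-up names. The host-side interface names ("veth-" plus the container name) are invented for readability, since real ones are generated by Docker. The routing table is a simplified listing in the style of ip route.

```python
import ipaddress
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class VethPair:
    host_end: str               # end plugged into the bridge (hypothetical name)
    container_end: str          # end inside the container's namespace
    ip: ipaddress.IPv4Address   # address handed to the container end


class ToyBridge:
    """Hypothetical model of steps 1-3 above; not LibNetwork code."""

    def __init__(self, name: str, cidr: str) -> None:
        self.name = name
        self.subnet = ipaddress.IPv4Network(cidr)
        self._hosts = iter(self.subnet.hosts())  # usable addresses, in order
        self.gateway = next(self._hosts)         # step 1: first address goes to the router
        self.ports: Dict[str, VethPair] = {}

    def attach(self, container: str) -> VethPair:
        """Steps 2-3: add a VETH pair and assign the next unused IP."""
        ip = next(self._hosts, None)
        if ip is None:
            raise RuntimeError(f"{self.subnet} has no addresses left")
        pair = VethPair(host_end=f"veth-{container}", container_end="eth0", ip=ip)
        self.ports[container] = pair
        return pair

    def routes_for(self, container: str) -> List[str]:
        """Simplified routing table as seen from inside the container."""
        pair = self.ports[container]
        return [
            f"default via {self.gateway} dev {pair.container_end}",
            f"{self.subnet} dev {pair.container_end} src {pair.ip}",
        ]


if __name__ == "__main__":
    bridge = ToyBridge("br0", "10.0.0.0/24")
    print(bridge.gateway)                # 10.0.0.1
    for name in ("c1", "c2"):
        print(bridge.attach(name).ip)    # 10.0.0.2, then 10.0.0.3
    print("\n".join(bridge.routes_for("c2")))
```

Each attach call stands for one VETH pair. The container_end shows up inside the container as eth0. Everything outside the local subnet goes through the gateway address on the Bridge.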

Docker has a Network Management CLI which is VERRRRY useful.

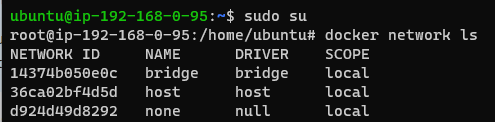

Right after a fresh install of Docker on an Ubuntu instance, you can list the networks that come out of the box with the docker network ls command (a fresh install ships with the bridge, host, and none networks):

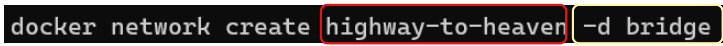

We can also create a custom network using the CLI.

For example, let's create a new Bridge-type network (or Bridge topology network) called highway-to-heaven:

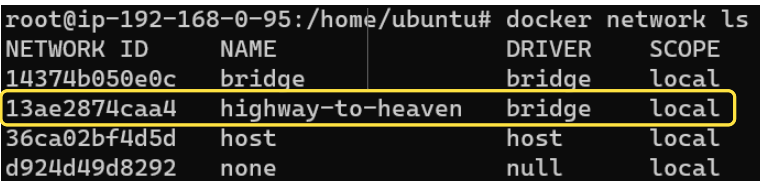

Running the command to list networks will show us that we did end up creating a new Bridge Topology/Type network:

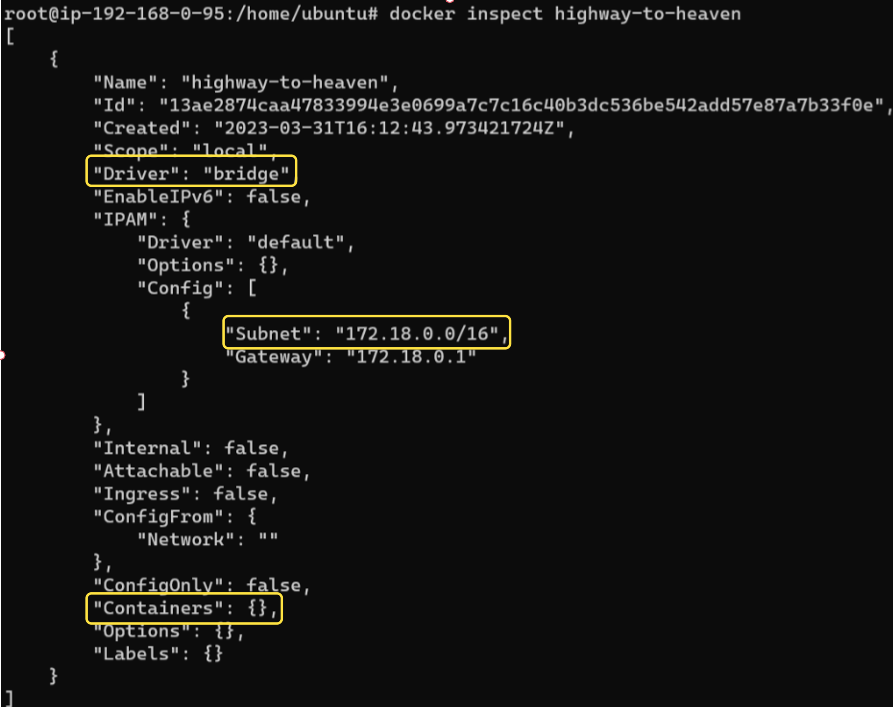
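The CLI steps in this section boil down to a handful of docker network subcommands. The original commands only appear in the screenshots, so the Python helper below builds equivalent argument lists and can optionally run them. network_cmd and run are hypothetical helpers. The connect line is one common way to attach an existing container; it is an assumption here, not something read from the screenshots.

```python
import shlex
import subprocess
from typing import List, Optional


def network_cmd(action: str, *args: str, driver: Optional[str] = None) -> List[str]:
    """Build the argument list for `docker network <action> ...`."""
    argv = ["docker", "network", action]
    if driver is not None:
        argv += ["-d", driver]  # -d is the short form of --driver
    argv += list(args)
    return argv


def run(argv: List[str]) -> str:
    """Execute a command and return stdout (needs a running Docker daemon)."""
    result = subprocess.run(argv, check=True, capture_output=True, text=True)
    return result.stdout


if __name__ == "__main__":
    steps = [
        network_cmd("ls"),                                             # list networks
        network_cmd("create", "highway-to-heaven", driver="bridge"),   # new Bridge network
        network_cmd("inspect", "highway-to-heaven"),                   # dump its metadata
        network_cmd("connect", "highway-to-heaven", "TestContainer"),  # attach a container
    ]
    for argv in steps:
        print(shlex.join(argv))  # printed only; pass argv to run() to execute
```

The connect step only works if a container named TestContainer already exists.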

To inspect the metadata for this new network, we can use the inspect command:

There are many interesting attributes that we could discuss but for now, notice the three outlined in yellow:

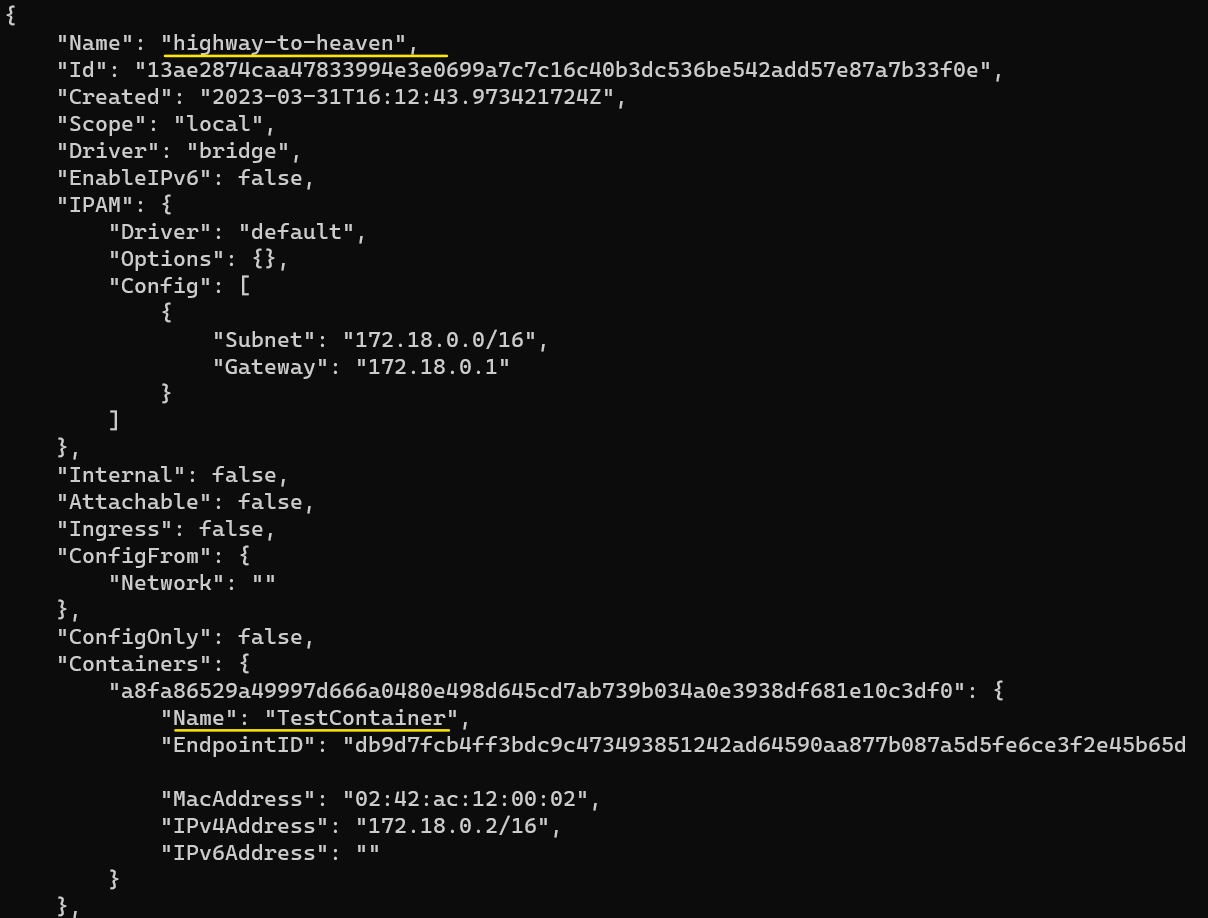

(1) The Driver (-d) flag enabled a Bridge Topology.

(2) The CIDR range given to this network is 172.18.0.0/16.

(3) There are no containers attached to this network ("Containers":{}).
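To check these three attributes from a script rather than by eye, you can parse the JSON that docker network inspect prints (a JSON array with one object per network). The sample below is abbreviated and illustrative; real output has many more fields. summarize is a hypothetical helper.

```python
import json

# Abbreviated, illustrative output of `docker network inspect highway-to-heaven`.
# Real output contains many more fields (Id, Scope, Gateway, Options, ...).
SAMPLE = """
[
  {
    "Name": "highway-to-heaven",
    "Driver": "bridge",
    "IPAM": {"Driver": "default", "Config": [{"Subnet": "172.18.0.0/16"}]},
    "Containers": {}
  }
]
"""


def summarize(inspect_output: str) -> dict:
    """Extract the driver, subnet(s), and attached-container count."""
    net = json.loads(inspect_output)[0]              # inspect prints a JSON array
    configs = net["IPAM"].get("Config") or []        # may be empty for some networks
    return {
        "driver": net["Driver"],
        "subnets": [cfg["Subnet"] for cfg in configs],
        "containers": len(net["Containers"]),
    }


if __name__ == "__main__":
    print(summarize(SAMPLE))
    # {'driver': 'bridge', 'subnets': ['172.18.0.0/16'], 'containers': 0}
```

With a Docker daemon available, you could pass in real output instead, captured through subprocess.run with capture_output=True and text=True.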

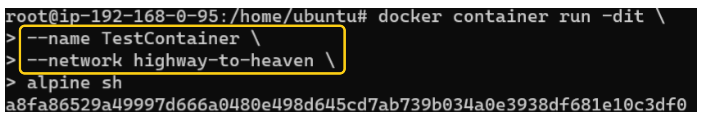

To attach a container to this new network, we can use the following commands:

Running the docker inspect highway-to-heaven command once more shows us that the container (TestContainer) did indeed get attached to our custom Bridge network:
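After a container is attached, the Containers map in the inspect output gains an entry for it, keyed by container ID. Below is a minimal Python sketch for checking attachment from a script. The sample JSON is abbreviated, its ID, MAC, and IP values are placeholders rather than real output, and attached_ip is a hypothetical helper.

```python
import json
from typing import Optional

# Illustrative, abbreviated inspect output after attaching a container.
# The ID, EndpointID, MAC, and IP values are placeholders, not real output.
SAMPLE = """
[
  {
    "Name": "highway-to-heaven",
    "Driver": "bridge",
    "Containers": {
      "container-id-placeholder": {
        "Name": "TestContainer",
        "EndpointID": "endpoint-id-placeholder",
        "MacAddress": "02:42:ac:12:00:02",
        "IPv4Address": "172.18.0.2/16",
        "IPv6Address": ""
      }
    }
  }
]
"""


def attached_ip(inspect_output: str, container_name: str) -> Optional[str]:
    """Return the container's IPv4 address on this network, or None if not attached."""
    net = json.loads(inspect_output)[0]
    for endpoint in net["Containers"].values():  # keyed by container ID
        if endpoint["Name"] == container_name:
            return endpoint["IPv4Address"]       # CIDR notation, e.g. "a.b.c.d/16"
    return None


if __name__ == "__main__":
    print(attached_ip(SAMPLE, "TestContainer"))  # 172.18.0.2/16
    print(attached_ip(SAMPLE, "SomethingElse"))  # None
```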

To recap, in this part we covered:

- Container Networking Model (CNM)

- LibNetwork

- Bridge Networks and how to make them using Docker CLI

- Attaching containers to a custom network

In Part 2, we will further explore container networking and its different flavors.

Container On!

I write to remember, and if in the process I can help someone learn about Containers, Orchestration (Docker Compose, Kubernetes), GitOps, DevSecOps, VR/AR, Architecture, and Data Management, that is just icing on the cake.