Thinking about developing an AI Application?

Always start with the 'Why'.

Why do you want to build this application? Is it to respond to emerging risks to your business, to keep up with competitive pressure, to boost profits and productivity, or is it simply FOMO?

Any or all of the reasons mentioned above could become your justification for rolling up your sleeves (or looking for vendors who have already rolled up their sleeves) and marching ahead.

What type of AI capabilities are necessary for your application?

Whether consumers demand AI-ification of products or companies add AI features to their products, there are at least 3 different AI-user interaction modes to consider (and which one is used will determine the kind of AI programming to focus on).

Mode # 1: The importance of the AI feature to the core value proposition of the product/service.

Is the AI feature driving the whole customer experience or just some aspects of it? The former DEMANDS exhaustive testing of the products/services while the latter, though important, will likely not inhibit the user from getting what they need.

Summary: If the AI features are on the critical path to a product's value proposition, the stakes are higher and rigorous testing is a must.

Mode # 2: Is the AI feature responding to a user's input OR is it a 'proactive' backseat driver?

No one appreciates a backseat driver. They are analogous to Lisa Simpson, whom Ned Flanders dubbed "Springfield's answer to questions never asked".

If proactive AI is consistently wrong, users may start to dislike the feature. The quality bar for such AI products is therefore EXTREMELY HIGH. Ignore it at your own risk.

With AI features that are activated at the user's behest (e.g., "Siri, show me 9 ways to apply Gorilla Glue to a door frame"), less-than-satisfactory responses can be chalked up either to the user not being proficient with the tool or to the notion that "we can't expect AI to be accurate all the time".

Summary: If AI is supposed to lead the user, it had better get it right more often than not. The reputational risk in such cases, especially if the products are considered vital for successfully achieving an objective, is high.

Mode # 3: How frequently will the AI features "learn and update"?

In the age of personalization, catering to every user's desire is nirvana. As AI product engineers, we have to figure out how often the AI will learn about the user and what this growing intelligence means for the product's stability, accuracy and, of course, the potential to harm the user.

Summary: Cost and risk are both at play here. You can spend more to test more and minimize the risk of harm, but that could mean your product is more expensive than your competitors' and may not hit the market before theirs.

You have an idea, you know it's a blockbuster and you want to develop it yourself. Now what?

"Ladies and gents, start your engines".

Be clear on a 'definition of success' for your product.

"It's going to change the world" is not enough (it is even irresponsible) as a barometer for a product's success.

In a world where AI's reputation and usefulness are always teetering on the edge between GOOD and EVIL, making sure that customers GWYSTW (Get What You Say They Will) is vital. Remember, if the barriers to entry are low for you, they are low for others as well, and any misstep in your promise to the end-user can sound a slow, spiralling death knell for your product.

An AI product mustn't be released before it’s ready.

But how does one know when their dream product is ready?

- Step # 1: Set clear and crisp measures for the product's usefulness, and make sure that all upstream and downstream dependencies are considered while doing so.

- Step # 2: Measure them as often as possible.

- Step # 3: Don't take a positive measurement as a guarantee of success or a negative one as a sure sign of failure. The numbers tell a story that may be intertwined with other processes, people and events outside your visual periphery.
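The three steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the metric names, targets and readings are made up, and `Metric`, `meets_target` and `readiness_report` are hypothetical helpers, not part of any library. To honor Step # 3, a target counts as met only when the average of the last few readings clears it, so a single good (or bad) number does not decide the outcome.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Metric:
    """A usefulness measure with an explicit target (Step # 1)."""
    name: str
    target: float
    higher_is_better: bool = True


def meets_target(metric, readings, window=3):
    """True only if the average of the last `window` readings meets the target.

    Averaging over a window keeps one lucky or unlucky reading from
    deciding the outcome (Step # 3).
    """
    if len(readings) < window:
        return False  # not measured often enough yet (Step # 2)
    recent = mean(readings[-window:])
    if metric.higher_is_better:
        return recent >= metric.target
    return recent <= metric.target


def readiness_report(metrics, history, window=3):
    """Map each metric name to whether its recent readings meet the target."""
    return {
        m.name: meets_target(m, history.get(m.name, []), window)
        for m in metrics
    }


# Illustrative numbers only; pick measures that fit your own product.
metrics = [
    Metric("answer_accuracy", target=0.90),
    Metric("hallucination_rate", target=0.05, higher_is_better=False),
]
history = {
    "answer_accuracy": [0.80, 0.93, 0.91, 0.92],
    "hallucination_rate": [0.04, 0.09, 0.08, 0.07],
}
report = readiness_report(metrics, history)
print(report)
```

Note that the hallucination rate started below its target and then drifted above it. A report like this is a conversation starter, not a verdict: the story behind each number still needs a human to read it.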

Be mentally ready for a twisting and winding road to your Elysium.

You may find foundation models that help you get part way to your goal. All you need to do, in such circumstances, is to "pick up the remaining tab".

Alternatively, you may be put off by the quality of the foundation models, or may suddenly realize that your team has limited expertise with your chosen starting point. Do you change your team or your starting point? Do you revisit the drawing board or throw your hands up in disgust and push forward because you know a sunk cost fallacy when you see it? Unfortunately, this is one decision even AI may not be able to make for you.

Be aware of the AI product's Last Mile Challenge.

In 2024, an Engineer at LinkedIn published this article.

A brief snippet from this article sheds a strong light on the difference between developing the first versions of an AI product and scaling it.

The team achieved 80% of the basic experience we were aiming to provide within the first month and then spent an additional four months attempting to surpass 95% completion of our full experience - as we worked diligently to refine, tweak and improve various aspects. We underestimated the challenge of detecting and mitigating hallucinations, as well as the rate at which quality scores improved—initially shooting up, then quickly plateauing.

WOW!
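One way to avoid being surprised by that kind of plateau is to watch for it in your own quality scores. The sketch below is a minimal illustration, not the method used in the article: `plateau_start` is a hypothetical helper, the weekly scores are invented, and the thresholds (`min_gain`, `patience`) are assumptions you would tune for your own product.

```python
def plateau_start(scores, min_gain=2, patience=3):
    """Return the index where a plateau begins, or None if there is none.

    A plateau is a run of `patience` consecutive measurements that each
    improve on the previous one by less than `min_gain`.
    """
    run = 0
    for i in range(1, len(scores)):
        gain = scores[i] - scores[i - 1]
        run = run + 1 if gain < min_gain else 0
        if run == patience:
            return i - patience + 1  # first measurement of the slow run
    return None


# Invented weekly quality scores (in percent): fast early gains, then a stall.
weekly_scores = [40, 62, 80, 84, 85, 85, 86, 90]
stall_index = plateau_start(weekly_scores)
print(stall_index)
```

Knowing when the curve flattened gives you a concrete moment to ask whether the remaining gap is worth another four months.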

Realize there will be maintenance, enhancements and defect fixes, not to forget cute wish-list items that don't add appreciable value.

The foundation models used may change, the team composition may alter, and user sentiments may point to a direction that was not part of the initial vision.

What should one do when facing a changing tide? Surf your way around it or let it wipe you out?

Unfortunately, there is no easy canned response to these kinds of situations. All one can do is model these kinds of risks in their calculus early on to avoid being VERY shocked when they do come to life.

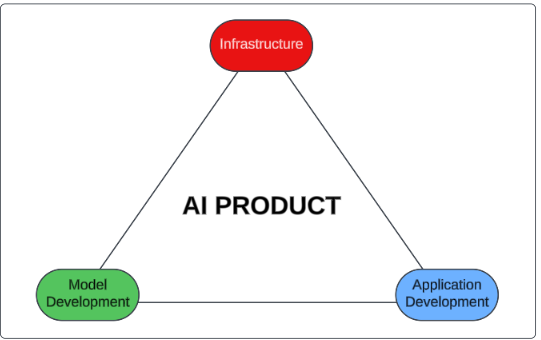

AI Engineering Stack.

It's time to talk tech.

Infrastructure

The bones and connective tissue of any AI product (or any data-heavy product, for that matter) are its infrastructure. Everything from engineering data pipelines to managing compute, data and consumption resources falls under this umbrella.

Model Development

This is where data scientists come into their own. This pillar provides tools for model development, including training, fine-tuning and optimization. Dataset ingestion, data quality checks and business-rules-driven models are all products of the work done while developing models.

Application Development

Interaction interfaces that can take input from users, turn their input into well-crafted prompts and deliver a response to them are built here. Prompt engineering is currently one of the most studied application development steps for engineering AI products.
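To make "turn their input into well-crafted prompts" concrete, here is a minimal sketch using only Python's standard library. Everything in it is an assumption for illustration: the template wording, the `build_prompt` helper and the product name "ExampleApp" are hypothetical, and the step that sends the prompt to a model is deliberately left out.

```python
from string import Template

# Hypothetical prompt template; the wording is illustrative only.
PROMPT = Template(
    "You are a support assistant for $product.\n"
    "Answer in at most $max_sentences sentences.\n"
    "If you are unsure, say so instead of guessing.\n\n"
    "User question: $question"
)


def build_prompt(question, product="ExampleApp", max_sentences=3):
    """Turn raw user input into a structured prompt string."""
    cleaned = " ".join(question.split())  # collapse stray whitespace
    if not cleaned:
        raise ValueError("question must not be empty")
    return PROMPT.substitute(
        product=product,
        max_sentences=max_sentences,
        question=cleaned,
    )


prompt = build_prompt("  How do I   reset my password? ")
print(prompt)
```

Keeping the template separate from the code that fills it in means the prompt wording can be tested and revised without touching the rest of the application.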


I write to remember, and if, in the process, I can help someone learn about Containers, Orchestration (Docker Compose, Kubernetes), GitOps, DevSecOps, VR/AR, Architecture, and Data Management, that is just icing on the cake.