Lambda vs Kappa: A Tale of Two Data Architecture Patterns.

Lambda Architecture is an excellent example of a dual-purpose data architecture, supporting batch and real-time streaming data methods while Kappa was created for real-time data.

Lambda Architecture

A best of both batch and real-time but master of none

Lambda Architecture Handles Both Batch and Real-Time Data

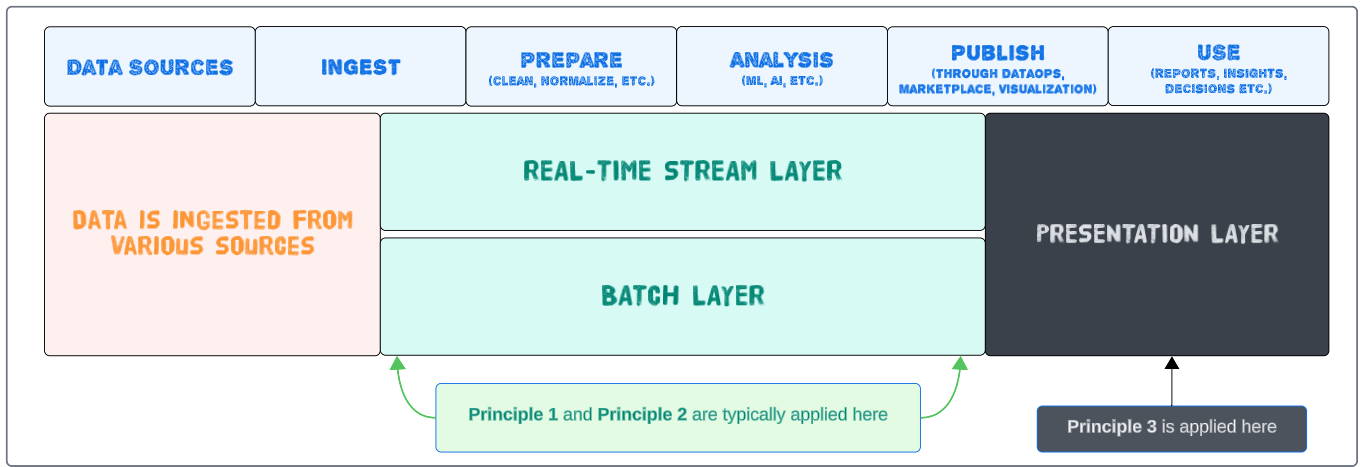

Lambda architecture can be a great design pattern if we are handling massive amounts of data (actually, any data architecture design pattern worth using MUST be able to do this) and want to run some parts of the data as a batch and some as a real-time stream. The concept aims to achieve comprehensive and precise insights into batch data while ensuring a balance between latency, throughput, scaling, and fault tolerance through batch processing. Concurrently, real-time stream processing is leveraged to offer insights into online data sources like IoT devices, Twitter feeds, or computer log files. These two can be integrated before reaching the presentation or serving layer.

The 3 Principles of Lambda Architecture

Principle # 1: Must have dual data models

One data model is for batch processing and the other is for real-time streaming data.

Principle # 2: Must have separate decoupled layers: one for batch processing and the other for real-time streaming.

Keeping batch and real-time layers and their data models separate allows them to be scaled independently.

Principle # 3: Must have a single unified presentation layer

Be it batch or real-time data, in the end, all of the data should be accessible through a single unified presentation layer.

Real-Time Stream Layer

- It allows incremental updating and is technically more complex to set up

- Focuses on low latency over accuracy

- Data quality can be questionable because of the focus on speed

- Data is usually saved in a data lake

Batch Layer

- Considered the single source of truth

- Contains clean data (eventually data in the Real-Time Stream layer will also be cleaned and stored in this layer)

- ETL cleans data and stores it in traditional relational data warehouses (and in data lakes if appropriate)

- This layer is built using a predefined schedule, usually daily or hourly, and includes importing the data stored in the Real-Time Stream layer.

Presentation Layer (or Serving Layer)

- This layer presents a unified view of all the data in both the Batch and Real-Time Stream layers.

- For most accurate data, this layer works with the Batch Layer and for immediate updates, it will get data from the Real-Time Stream layer.

Lambda Architecture's biggest drawback

Lambda Architecture, by design, does not support stateful data processing.

While a useful capability, statefulness can impact a system's ability to scale, reduces fault tolerance and is complex. Lambda architecture-based data platforms process events discretely and generally cannot determine the relationship between these events.

Kappa Architecture

Real Time Streaming is this architecture's sizzle !!

Kappa architecture only worries about real-time streaming data, unlike Lambda with its dual-purpose design.

The 3 Principles of Kappa Architecture

Principle # 1: Real-Time Processing

Events are processed as soon as they are ingested, reducing latency and allowing immediate responses to quickly changing conditions.

Principle # 2: Single Event Stream

Kappa architecture uses a single event stream to store all data that flows through the system. This allows for easy scalability and fault tolerance since the data can be distributed easily across multiple nodes.

Principle # 3: Stateless Processing

All processing is stateless, which means that each event is processed independently, without relying on the state of previous events. This makes system scalability easier since there is no concern about keeping the state across newly added nodes.

Drawbacks of Kappa Architecture

- It is not ideal for ad-hoc queries against the data, because the architecture has been conceptually optimized for real-time streaming (and ad-hoc queries may require to work against a volume of data that is not available).

- It lacks, again conceptually, batch processing capabilities.

- Streaming data architectures are generally more complex than their counterparts.

I write to remember, and if, in the process, I can help someone learn about Containers, Orchestration (Docker Compose, Kubernetes), GitOps, DevSecOps, VR/AR, Architecture, and Data Management, that is just icing on the cake.