K8s Storage: Dynamic Storage Provisioning.

Storage is complex. A Pod needs to store a file, the file needs to persist across container restarts, and the Pod (along with its data) needs to be schedulable onto new nodes in our cluster. Throw in the dynamism of data needs ("we were fine with 10GB of storage for our cluster, but new features have been very popular and we need more space ... and by the way, we don't really have a sense of how much and when") and the question of storage for K8s becomes even more complicated (and intriguing). This is the kind of situation where Dynamic Storage Provisioning comes into the limelight.

Table of Contents

- Dynamic Storage Provisioning requires a StorageClass.

- StorageClass, PVC and PV.

- Demo: Dynamic Provisioning, StorageClass and Azure.

Dynamic Storage Provisioning requires a StorageClass.

A what? StorageClass? Never heard of this before. No matter. Read on.

First off, a minor digression.

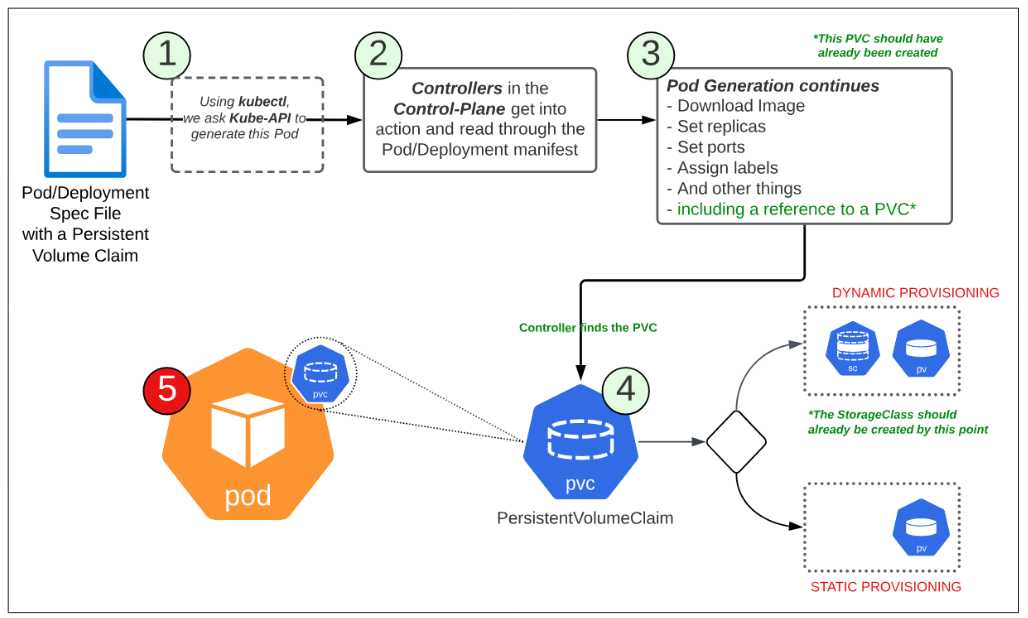

The overall flow of events involved in both Static and Dynamic Storage Provisioning is pretty much the same.

Figure 1 paints a very high-level picture of the end-to-end flow of events when a Pod is initiated (since this article is about storage, all other aspects of Pod creation are ignored). At step 4, the PVC referenced in the Pod spec is attached to the Pod, so whatever storage backs that PVC indirectly becomes the storage used by the Pod.

Now back to the question we need to answer. What is a Storage Class?

- A StorageClass is a native K8s object (read more about it in the official K8s documentation) that lets sophisticated storage semantics, such as which provisioner creates a volume, with which parameters and with which reclaim policy, be specified declaratively.

- StorageClasses and Dynamic Storage Provisioning are a powerful combo: they let workloads with very different storage needs (e.g. a simple web app, a machine learning model) get volumes that match those needs, created on demand instead of pre-provisioned by an administrator.

- A StorageClass gives application developers a way to get a volume without knowing exactly how it is implemented. Applications request storage through a PVC that names a StorageClass, without knowing which type of PersistentVolume is being used under the hood.
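To make the declarative part concrete, here is a minimal sketch of a StorageClass manifest. It assumes the Azure Disk CSI driver (provisioner `disk.csi.azure.com`) that recent AKS versions use by default; the class name `fast-disk` is hypothetical and the parameter values are illustrative, not copied from the classes used in this demo.

```yaml
# Hypothetical StorageClass: the name "fast-disk" is made up for illustration.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: fast-disk
# Which volume plugin creates the disks (assumed: Azure Disk CSI driver).
provisioner: disk.csi.azure.com
# Driver-specific settings; here, the Azure disk SKU.
parameters:
  skuName: Premium_LRS
# Delete the PV and its Azure disk once the PVC is deleted.
reclaimPolicy: Delete
# Delay provisioning until a Pod that uses the PVC is scheduled.
volumeBindingMode: WaitForFirstConsumer
# Allow PVCs of this class to be resized later.
allowVolumeExpansion: true
```

A PVC opts into this class simply by setting `storageClassName: fast-disk`; the application never names the provisioner or the disk SKU, which is the abstraction described above. The `allowVolumeExpansion` field is also what makes the "we need more space, but we don't know how much" scenario from the introduction manageable.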

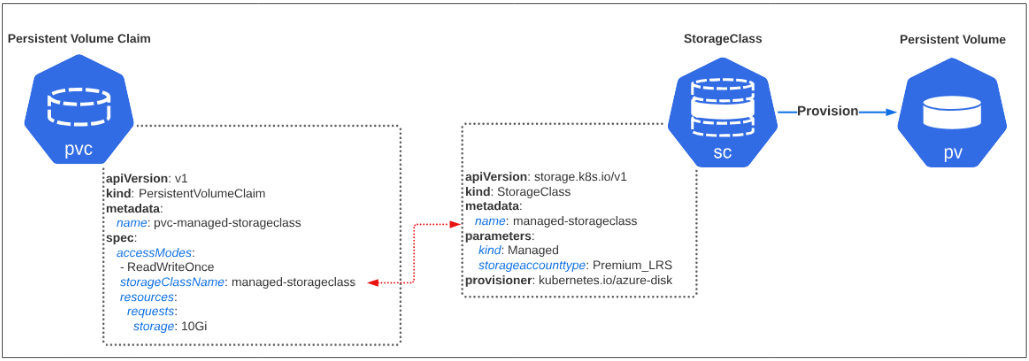

How are StorageClass, PVC and PV related?

*PVC = Persistent Volume Claim, PV = Persistent Volume

Demo: Dynamic Provisioning, StorageClass and Azure.

- An Azure Kubernetes Service (AKS) cluster

- kubectl (installed locally)

Step 1: Get a list of StorageClass types available on Azure.

Running $ kubectl get storageclass lists them. Salient points:

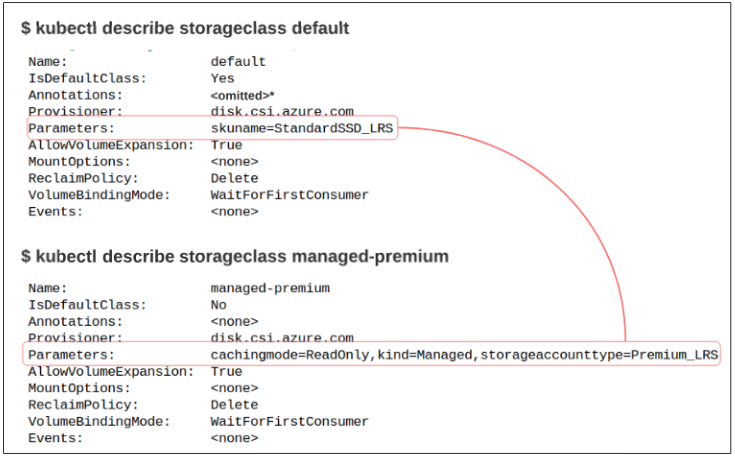

- There are 9 different StorageClasses available in the AKS cluster, including default and managed (the one whose manifest is shown in Figure 2).

Like candy bars, each StorageClass shown in Figure 3 has a different profile for performance, cost, scalability, etc.

- The RECLAIMPOLICY is Delete for all of them.

On a tangent, let's do a side-by-side comparison of the default StorageClass (named default) and the managed-premium StorageClass in Figure 3.

The default StorageClass provisions Standard SSD disks with Locally Redundant Storage (LRS), while managed-premium provisions Premium SSD disks, also with LRS (typically more expensive and more performant than the default type).
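The difference between the two classes mostly comes down to the disk SKU handed to the provisioner. The sketch below shows roughly how the two manifests differ, assuming the legacy in-tree Azure Disk provisioner (`kubernetes.io/azure-disk`) that classes with these names were originally built on; newer clusters back the equivalent classes with the CSI driver, so check the real definitions with $ kubectl get storageclass <name> -o yaml.

```yaml
# Sketch only: approximate shape of the two classes, assuming the legacy
# in-tree Azure Disk provisioner. Real clusters may use other parameters.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: default
  annotations:
    # Marks this class as the default for PVCs that omit storageClassName
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: kubernetes.io/azure-disk
parameters:
  kind: Managed                        # Azure managed disk
  storageaccounttype: StandardSSD_LRS  # Standard SSD, locally redundant
reclaimPolicy: Delete
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-premium
provisioner: kubernetes.io/azure-disk
parameters:
  kind: Managed
  storageaccounttype: Premium_LRS      # Premium SSD, locally redundant
reclaimPolicy: Delete
```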

Step 2: Create a PersistentVolumeClaim.

Before we create the Pod that uses the PVC, let's create the PVC itself.

The manifest for the PVC is detailed below:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-azure
spec:
  accessModes:
    - ReadWriteOnce
  # Ask the managed-premium StorageClass to provision the volume
  storageClassName: managed-premium
  resources:
    requests:
      storage: 10Gi
```

As per this manifest, we are making a PersistentVolumeClaim which:

- Has its name set to pvc-azure

- Allows ReadWriteOnce access (the volume can be mounted read-write by a single node)

- Asks AKS to use the managed-premium StorageClass for the provisioning

- Requests 10Gi (10 gibibytes) of storage space

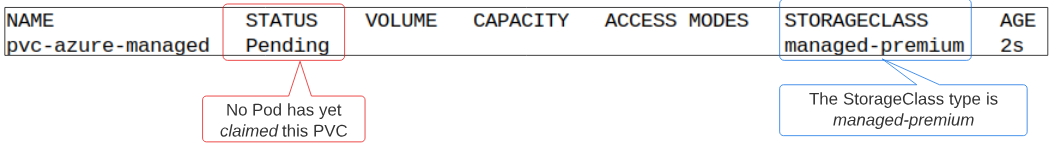

Running $ kubectl create -f pvc-azure.yaml creates an instance of the PVC (named pvc-azure). We can eyeball the successful creation of this PVC by listing all PVCs with $ kubectl get pvc.

Step 3: Deploy a Pod that claims this PVC.

The manifest for the Deployment is detailed below:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-azure-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      volumes:
        # Volume backed by the PVC created in Step 2
        - name: myservercontent
          persistentVolumeClaim:
            claimName: pvc-azure
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
          volumeMounts:
            # Mount that volume into the nginx container
            - name: myservercontent
              mountPath: "/usr/share/nginx/html/web-app"
```

As per the Deployment, the expected state once it is complete should resemble the diagram below:

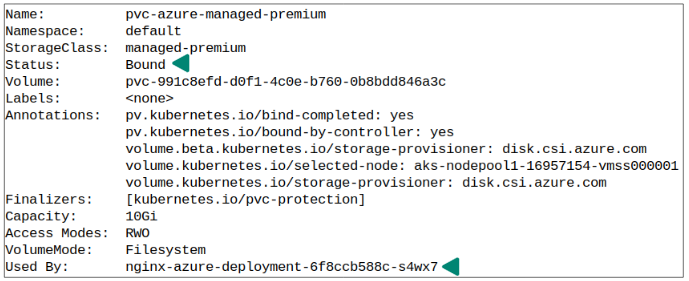

Create the Deployment (using $ kubectl create -f nginx-on-azure.yaml) and check whether the PVC's status has changed from Pending to Bound.

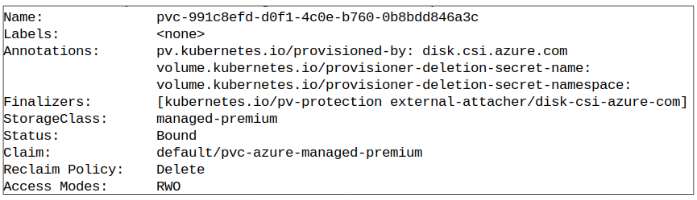

Step 4: Confirm the PersistentVolume has also been created.

Use $ kubectl get pv to list the PVs, then take the name of the PV that AKS provisioned for pvc-azure and run $ kubectl describe pv <name of PV>.
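When reading the describe output, the interesting fields are the ones that tie the PV back to the PVC and the StorageClass; in kubectl describe they show up as StorageClass, Status, Claim and Reclaim Policy. The abridged sketch below shows the same fields as they would appear in the PV's YAML ($ kubectl get pv <name of PV> -o yaml). It is illustrative, not real output: the name is a placeholder, the namespace is an assumption, and the Azure-specific volume source and other fields are omitted.

```yaml
# Abridged, illustrative PV: only the fields linking it to the PVC and
# StorageClass are shown; the Azure volume source is omitted.
apiVersion: v1
kind: PersistentVolume
metadata:
  # Dynamically provisioned PVs are named after the claim's UID
  name: pvc-<uid>
spec:
  capacity:
    storage: 10Gi                         # matches the PVC request
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Delete   # inherited from the StorageClass
  storageClassName: managed-premium       # the class that provisioned it
  claimRef:                               # the PVC this PV is bound to
    kind: PersistentVolumeClaim
    namespace: default                    # assumption: PVC in "default"
    name: pvc-azure
status:
  phase: Bound
```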

Test whether the PVC is actually holding onto data from the container.

The proof of the pudding is in the eating. The tall claims made about K8s storage so far must be put to the test. Unfortunately, there is no easy way of peeking into a PVC to confirm its contents. There is a workaround, however.

We can save a file from the nginx container into its mounted directory, then spin up a second, simple Pod that mounts the same PVC (pvc-azure) at a directory of its own (/pvc). If the file shows up in /pvc, the data really lives in the PVC and our work is finished (metaphorically, of course).

Save a file in the mounted directory for the container

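```shell
# exec into the running Pod (find its name with: kubectl get pods -l app=nginx)
kubectl exec -it <name of Pod> -- /bin/sh

# The following commands run inside the container's shell.
# Navigate to the directory that is mounted for the container
cd /usr/share/nginx/html/web-app

# Save a file
echo "This is a test" > test.txt
```

Generate a simple Pod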

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pvc-inspector
spec:
  containers:
    - image: busybox
      name: pvc-inspector
      # Keep the container running so we can exec into it
      command: ["tail"]
      args: ["-f", "/dev/null"]
      volumeMounts:
        - mountPath: /pvc
          name: pvc-mount
  volumes:
    # Mount the same PVC that the nginx Deployment uses
    - name: pvc-mount
      persistentVolumeClaim:
        claimName: pvc-azure
```

Exec into pvc-inspector

```shell
kubectl exec -it pvc-inspector -- sh
```

Inside that shell, look in the /pvc directory (e.g. with ls /pvc and cat /pvc/test.txt) and check whether the file saved in /usr/share/nginx/html/web-app is also visible in /pvc.
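One caveat: ReadWriteOnce means the volume can be mounted read-write by a single node (Pods on that same node can still share it), and an Azure disk can only be attached to one node at a time. If pvc-inspector is scheduled onto a different node than the nginx Pod, it can get stuck in ContainerCreating, typically with a Multi-Attach error in $ kubectl describe pod pvc-inspector. A minimal sketch of a variant that asks the scheduler to place pvc-inspector on the same node as the nginx Pod, using required pod affinity on the app: nginx label from the Deployment:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pvc-inspector
spec:
  affinity:
    podAffinity:
      # Only schedule onto a node (hostname) already running an app=nginx Pod
      requiredDuringSchedulingIgnoredDuringExecution:
        - labelSelector:
            matchLabels:
              app: nginx
          topologyKey: kubernetes.io/hostname
  containers:
    - image: busybox
      name: pvc-inspector
      # Keep the container running so we can exec into it
      command: ["tail"]
      args: ["-f", "/dev/null"]
      volumeMounts:
        - mountPath: /pvc
          name: pvc-mount
  volumes:
    - name: pvc-mount
      persistentVolumeClaim:
        claimName: pvc-azure
```

Because the rule is "required", this Pod stays Pending if no node is running a Pod labelled app: nginx, which is a clearer failure than a stuck volume attach.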

I write to remember and if in the process, I can help someone learn about Containers, Orchestration (Docker Compose, Kubernetes), GitOps, DevSecOps, VR/AR, Architecture, and Data Management, that is just icing on the cake.