K8s Security: Restricting Intra-Network Traffic Between Pods

The Kubernetes networking model requires that every pod be able to communicate with every other pod in the cluster, without NAT and without any additional configuration.

However, there are cases where letting every pod talk to every other pod is unnecessary, and even insecure. In such cases, we can restrict the flow of traffic between pods using Network Policies.

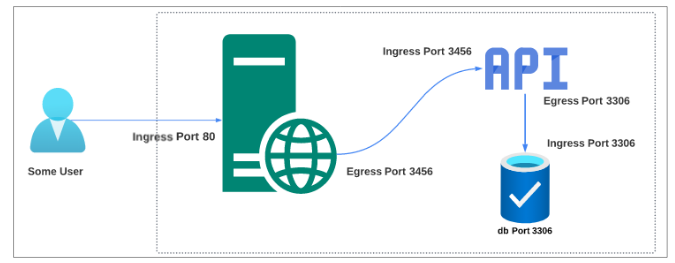

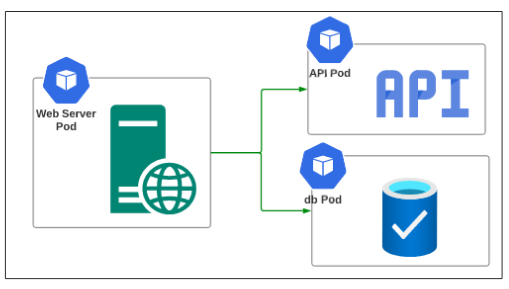

Before we dive deeper into Network Policies and what they can help us do, let's sketch a simple example of traffic flow inside a cluster of 3 pods: a web server, an API, and a database.

Notice the duality of the ports (especially for the web server and the API). The port the web server sends traffic to (3456, its egress port) is the API's ingress port. Similarly, the API's egress port 3306 is the database's ingress port. For this kind of communication to work, all the pods need to be on the SAME flat, cluster-wide network. Kubernetes delegates building this network to CNI plugins such as Calico, Cilium, and Flannel.

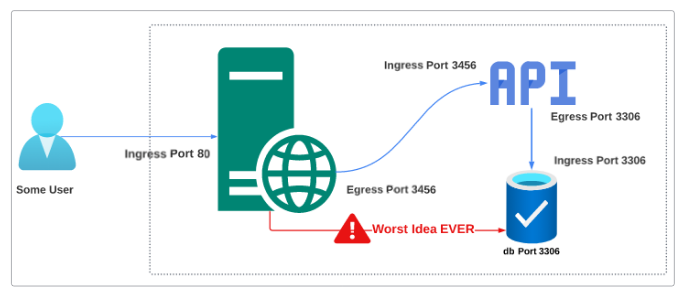

Since all the pods in a cluster share the same network, it is reasonable to assume that the web server can connect DIRECTLY to the database, without going through the API. Bypassing the API may offer slightly better performance, but it also opens a Pandora's box of vulnerabilities. If the web server is compromised and can reach the database directly, one does not need much imagination to see what that means for the database's security (and, by extension, the security of users' data).
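To make the risk concrete, here is a minimal Python sketch (not part of the original demo) that models the three pods from the example and the ports they listen on: 3456 for the API and 3306 for the database. Each `(source, destination, port)` triple is egress traffic for the source and ingress traffic for the destination, which is the duality described above. Because the default is allow-all, any pod can open a connection to any other pod's listening port, so the set of reachable paths is larger than the set the architecture needs. The pod names `web`, `api`, and `db` are illustrative assumptions, and the web server's own listening port is not given, so it is left out.

```python
# Ingress (listening) ports from the example. The web server's own
# listening port is not given, so it only appears as a traffic source.
LISTEN_PORTS = {"api": 3456, "db": 3306}
PODS = ["web", "api", "db"]

# Flows the architecture actually needs. Each triple is egress for the
# source pod and ingress for the destination pod.
INTENDED = {("web", "api", 3456), ("api", "db", 3306)}


def default_reachable(pods, listen_ports):
    """Return every (source, destination, port) triple allowed with no Network Policy.

    Kubernetes defaults to allow-all, so any pod can reach any other pod's
    listening port.
    """
    return {
        (src, dst, port)
        for src in pods
        for dst, port in listen_ports.items()
        if src != dst
    }


reachable = default_reachable(PODS, LISTEN_PORTS)
unneeded = sorted(reachable - INTENDED)
print(unneeded)  # → [('db', 'api', 3456), ('web', 'db', 3306)]
```

The leftover `('web', 'db', 3306)` path is exactly the shortcut described above; `('db', 'api', 3456)` is another path nobody asked for.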

Enter Network Policies.

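Network Policies are a namespaced Kubernetes resource that controls traffic between pods and/or other network endpoints. By default, Kubernetes allows all pods to communicate with each other; Network Policies let us constrain that traffic at the pod and/or port level. Once a pod is selected by a Network Policy, it becomes isolated for the direction(s) the policy covers (ingress, egress, or both), and only traffic that some policy explicitly allows gets through. Note that policies are only enforced when the cluster's CNI plugin supports them: Calico and Cilium do, while Flannel on its own does not.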
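The "default allow, isolated once selected" rule can be illustrated with a minimal Python sketch of ingress evaluation. It is a deliberate simplification, not the Kubernetes implementation: only `podSelector.matchLabels` and numeric ports are modelled, while namespaces, `ipBlock`, `matchExpressions`, protocols, and egress rules are ignored. The `app: web`/`api`/`db` labels in the usage lines are hypothetical.

```python
def matches(selector, labels):
    """Return True if every matchLabels entry in selector is present in labels.

    An empty selector ({}) matches every pod, mirroring Kubernetes.
    """
    wanted = selector.get("matchLabels", {})
    return all(labels.get(key) == value for key, value in wanted.items())


def ingress_allowed(policies, src_labels, dst_labels, port):
    """Simplified check: may a pod with src_labels reach a pod with dst_labels on port?"""
    selecting = [
        p for p in policies
        if matches(p["spec"]["podSelector"], dst_labels)
        and "Ingress" in p["spec"].get("policyTypes", ["Ingress"])
    ]
    if not selecting:
        # No policy selects the destination: the allow-all default applies.
        return True
    # The destination is isolated; rules from all selecting policies are unioned.
    for policy in selecting:
        for rule in policy["spec"].get("ingress", []):
            peers = rule.get("from", [])
            ports = rule.get("ports", [])
            # An empty or missing "from" matches any source; likewise for "ports".
            peer_ok = not peers or any(
                "podSelector" in peer and matches(peer["podSelector"], src_labels)
                for peer in peers
            )
            port_ok = not ports or any(p.get("port") in (None, port) for p in ports)
            if peer_ok and port_ok:
                return True
    # Isolated and no rule matched: the traffic is denied.
    return False


# Hypothetical policy: only app=api pods may reach app=db pods on port 3306.
allow_api = {"spec": {
    "podSelector": {"matchLabels": {"app": "db"}},
    "policyTypes": ["Ingress"],
    "ingress": [{"from": [{"podSelector": {"matchLabels": {"app": "api"}}}],
                 "ports": [{"port": 3306}]}],
}}

print(ingress_allowed([], {"app": "web"}, {"app": "db"}, 3306))           # True
print(ingress_allowed([allow_api], {"app": "web"}, {"app": "db"}, 3306))  # False
print(ingress_allowed([allow_api], {"app": "api"}, {"app": "db"}, 3306))  # True
```

Policies are additive: adding a second policy that selects the same pod can only widen what is allowed, never narrow it.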

Of course, the proof of the pudding is in the eating, and the upcoming demo will make this clearer.

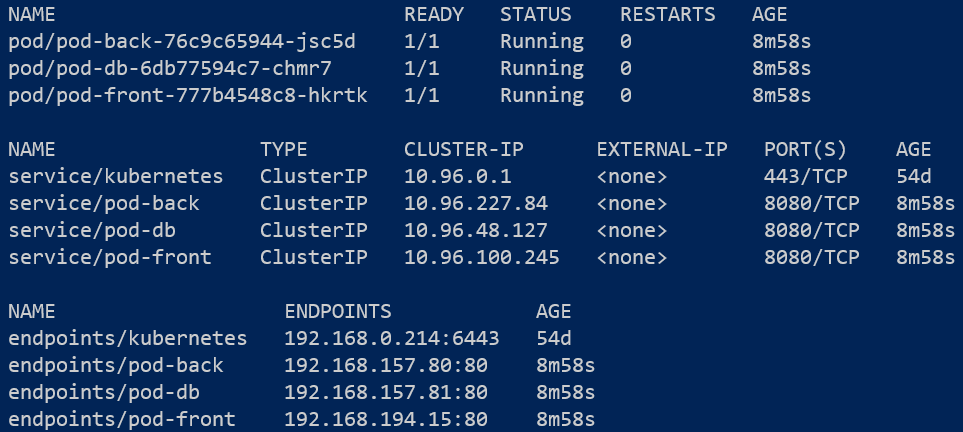

Expanding on the example illustrated in Figure 2, let's set up 3 Deployments, each with an associated Service:

- A front-end pod (and associated service) that acts as the web server.

- A back-end pod (and associated service) that acts like the middle tier/business logic layer/backend.

- A database tier pod (and associated service) that mimics the database.
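The original Deployment and Service manifests are not reproduced as text, so here is a minimal Python sketch that generates a comparable set as a single JSON `List` (`kubectl apply -f` accepts JSON as well as YAML). Several details are assumptions: the `nginx:1.25` image and port 80 are stand-ins, and since the article selects the database with `tier=pod-db` but the back end with `app: pod-back`, every pod here gets both an `app` and a `tier` label named after its tier. The container used for the later curl tests also needs curl installed, which is worth verifying for whatever image you choose.

```python
import json

TIERS = ["pod-front", "pod-back", "pod-db"]


def deployment(name, image="nginx:1.25", port=80):
    # Assumption: each tier is a single replica of a stand-in image on port 80.
    labels = {"app": name, "tier": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image,
                         "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }


def service(name, port=80):
    # ClusterIP Service that selects the Deployment's pods by the same labels.
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": {"app": name, "tier": name},
            "ports": [{"protocol": "TCP", "port": port, "targetPort": port}],
        },
    }


# One Deployment and one Service per tier, wrapped in a v1 List.
manifest = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [obj for tier in TIERS for obj in (deployment(tier), service(tier))],
}
print(json.dumps(manifest, indent=2))
```

Redirecting the output to a file (for example a hypothetical `tiers.json`) and running `kubectl apply -f tiers.json` should create all six objects in one step.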

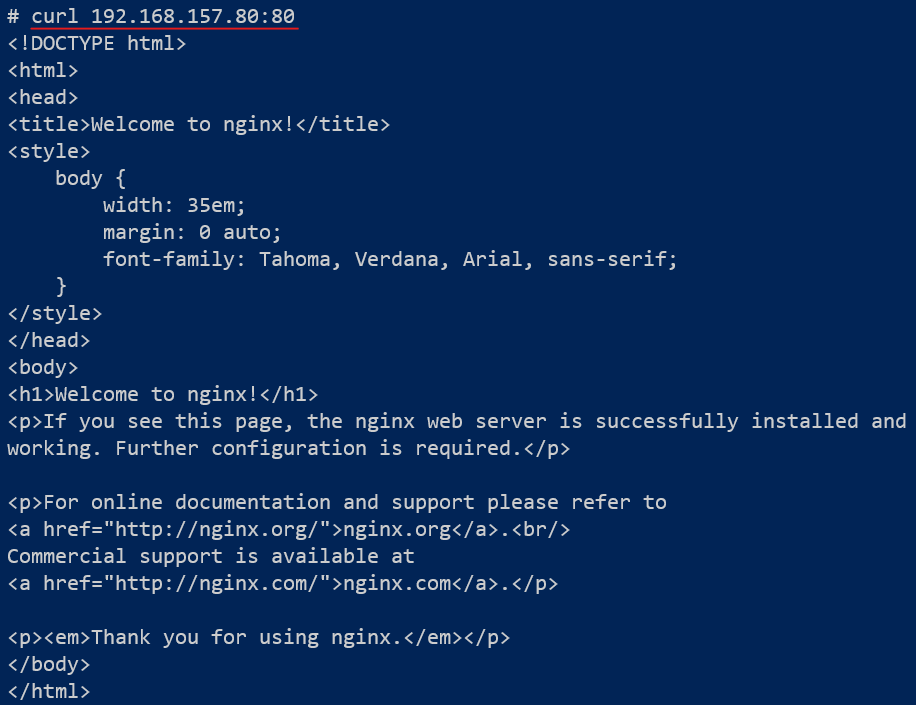

Let's confirm the hypothesis that, by default, all pods can talk to all other pods. To do this, we can go inside each pod and send a curl request to the other 2 pods.

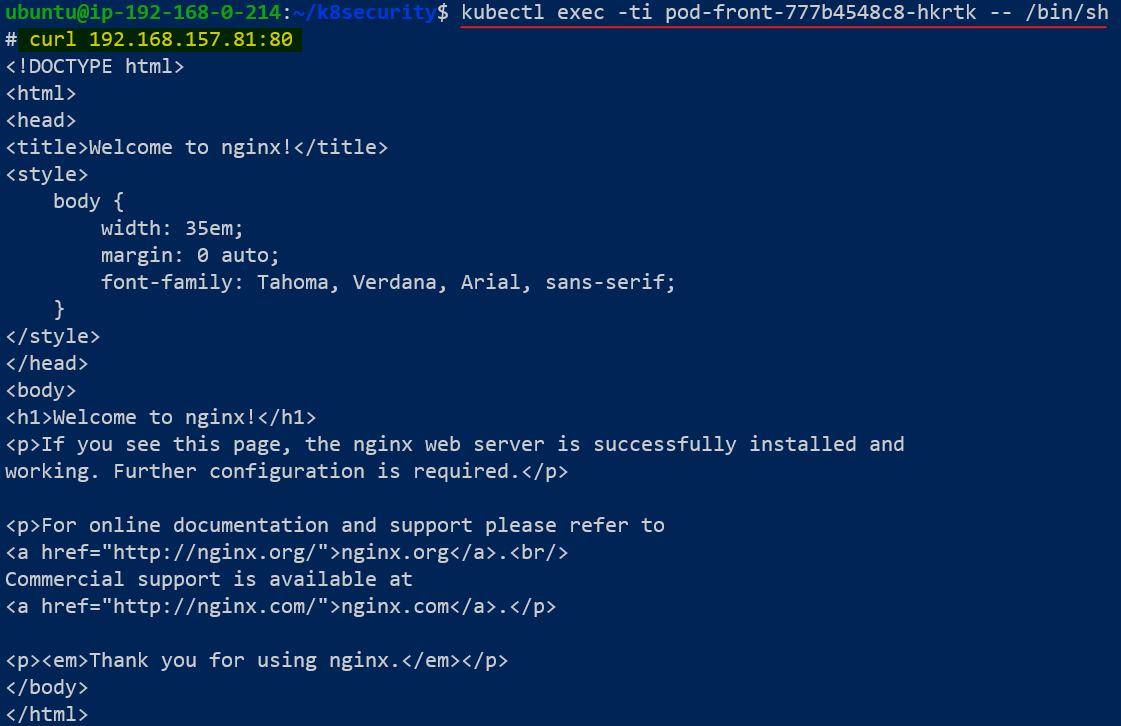

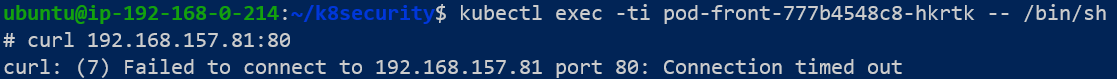

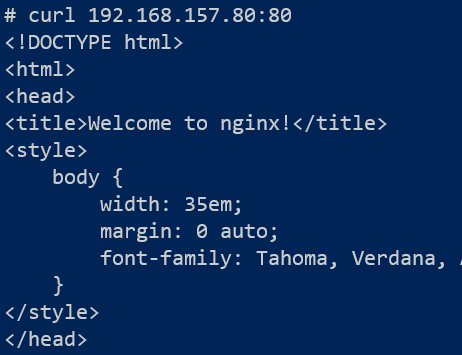

Confirming pod-front can connect to pod-back and pod-db

- Use `kubectl exec -ti <name of front-end pod> -- /bin/sh` to go inside the front-end pod and send a curl command to pod-db.

- To confirm that the default pod-to-pod communication is unrestricted, also send a curl request from pod-front to pod-back.
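The same check can be scripted instead of typed into an interactive shell. This minimal Python sketch builds a non-interactive `kubectl exec` command that runs curl inside the source pod. It assumes the source container has curl, that the targets are reachable by Service DNS names such as `pod-db` on port 80 (hypothetical names), and uses `deploy/pod-front` so kubectl picks a pod of that Deployment. `--max-time` makes a blocked connection fail quickly instead of hanging, and `kubectl exec` exits with curl's exit code.

```python
import subprocess


def curl_probe_cmd(src, target, port=80, timeout_s=3):
    """Build a kubectl exec command that curls http://target:port from inside src.

    Everything after "--" runs inside the pod, mirroring the interactive
    `kubectl exec -ti <pod> -- /bin/sh` session.
    """
    return [
        "kubectl", "exec", src, "--",
        "curl", "-s", "-o", "/dev/null",
        "--max-time", str(timeout_s),
        f"http://{target}:{port}",
    ]


def can_reach(src, target, port=80, timeout_s=3):
    # kubectl exec propagates curl's exit code, so 0 means the request succeeded.
    result = subprocess.run(curl_probe_cmd(src, target, port, timeout_s),
                            capture_output=True)
    return result.returncode == 0


if __name__ == "__main__":
    # Only prints the command; call can_reach() against a live cluster.
    print(curl_probe_cmd("deploy/pod-front", "pod-db"))
```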

The outcomes shown in Figures 4 and 5 clearly indicate that the database is easily accessible from the web server pod (pod-front). An attacker who breaks through the web server's defenses is primed to get into the database.

Figure 6 below gives a simple visualization of how open the pods are to each other.

Creating a Network Policy that does NOT allow the web server pod to send any traffic to the db pod

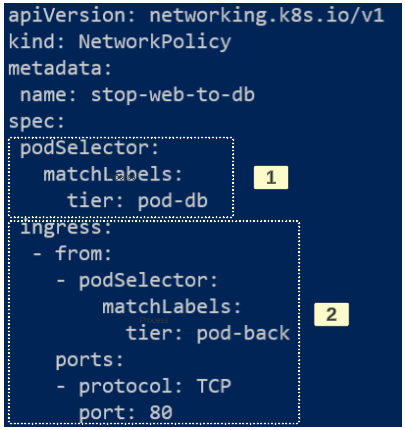

Before we create the manifest for this Network Policy, let's look at its YAML structure:

[1]: In simple English, this section (`spec.podSelector`) identifies the pods to which this Network Policy applies. The deployment manifest for pod-db labels it with "tier=pod-db", so that is the label we select on.

[2]: This section of the manifest (the ingress rules) specifies WHICH pods are allowed to send traffic to pod-db: only pods matching the podSelector "app: pod-back", and only on port 80.

pod-front is not mentioned anywhere in the ingress rules. Because pod-db is selected by this policy, it now accepts only the ingress traffic the policy explicitly allows, so, in theory at least, we have snipped the wire between pod-front and pod-db.
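The policy's YAML is not reproduced as text here, so this minimal Python sketch rebuilds the structure described in [1] and [2] as a dict and prints it as JSON, which `kubectl apply -f` also accepts. The name comes from the stop-web-to-db.yaml file used in the next step; the explicit `policyTypes: ["Ingress"]` and `protocol: TCP` are assumptions rather than details confirmed by the original manifest.

```python
import json

policy = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "NetworkPolicy",
    "metadata": {"name": "stop-web-to-db"},
    "spec": {
        # [1] The pods this policy applies to, and therefore isolates for ingress.
        "podSelector": {"matchLabels": {"tier": "pod-db"}},
        # Assumption: stated explicitly here; Ingress is also the default.
        "policyTypes": ["Ingress"],
        # [2] The only traffic pod-db accepts: from app=pod-back pods, TCP port 80.
        "ingress": [
            {
                "from": [{"podSelector": {"matchLabels": {"app": "pod-back"}}}],
                "ports": [{"protocol": "TCP", "port": 80}],
            }
        ],
    },
}

print(json.dumps(policy, indent=2))
```

Note that pod-front is never named: it is blocked simply because it matches no rule once pod-db is isolated.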

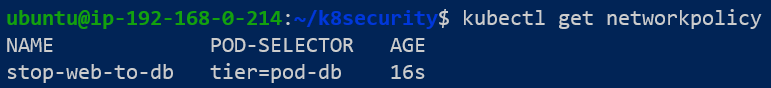

Apply the proposed Network Policy to the cluster using `kubectl apply -f stop-web-to-db.yaml`.

Confirming that the policy was created is one thing, but does it really impose the restrictions we want? To find out, we can:

- Go back into pod-front using `kubectl exec -ti <name of front-end pod> -- /bin/sh`.

- Issue a curl command against pod-db's endpoint IP address.

As a control, we will also send a curl request from pod-front to pod-back.
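The endpoint IP that pod-front curls can be read from the Service's Endpoints object. This minimal Python sketch parses the JSON printed by `kubectl get endpoints <service> -o json`, using the `subsets[].addresses[].ip` and `subsets[].ports[].port` fields. The Service name `pod-db` is an assumption, and the sample object in the usage lines is illustrative, with a made-up IP.

```python
import json
import subprocess


def endpoint_urls(endpoints_obj):
    """Return http://IP:port URLs for the ready addresses in a v1 Endpoints object."""
    urls = []
    for subset in endpoints_obj.get("subsets", []):
        for addr in subset.get("addresses", []):
            for port in subset.get("ports", []):
                urls.append(f"http://{addr['ip']}:{port['port']}")
    return urls


def fetch_endpoints(service):
    # Needs kubectl access to the cluster; the service name is an assumption.
    out = subprocess.run(
        ["kubectl", "get", "endpoints", service, "-o", "json"],
        capture_output=True, text=True, check=True,
    ).stdout
    return json.loads(out)


if __name__ == "__main__":
    # Illustrative object shaped like the kubectl output; the IP is made up.
    sample = {"subsets": [{"addresses": [{"ip": "10.0.0.12"}],
                           "ports": [{"port": 80}]}]}
    print(endpoint_urls(sample))  # ['http://10.0.0.12:80']
```

Against a live cluster, `endpoint_urls(fetch_endpoints("pod-db"))` would give the URLs to curl from inside pod-front.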

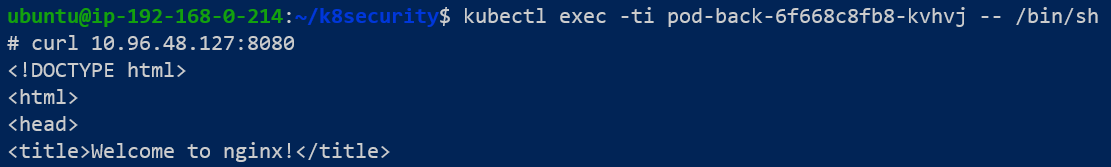

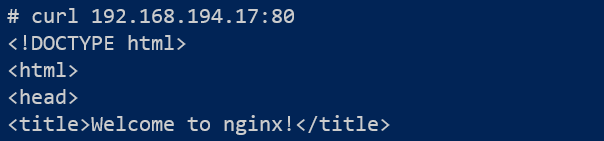

The outcomes shown in Figures 9 and 10 confirm that the Network Policy is stopping pod-front from accessing pod-db.

As part of the Network Policy, we explicitly allowed ingress to pod-db from pod-back on port 80.

We can confirm that this traffic still gets through by going inside pod-back and sending a curl request to pod-db and, for laughs, to pod-front as well.
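To check every direction at once rather than pair by pair, the results can be collected into a connectivity matrix. This minimal Python sketch assumes a `probe(src, dst)` callable that returns True when a request succeeds; in a live cluster it would wrap a `kubectl exec ... -- curl ...` call. Here `expected_probe` is a hypothetical stand-in that encodes what stop-web-to-db is designed to enforce (pod-db isolated, accepting only pod-back); it is an expectation, not captured results.

```python
from typing import Callable, Dict, List, Tuple

PODS = ["pod-front", "pod-back", "pod-db"]


def connectivity_matrix(
    pods: List[str], probe: Callable[[str, str], bool]
) -> Dict[Tuple[str, str], bool]:
    """Run probe(src, dst) for every ordered pair of distinct pods."""
    return {(src, dst): probe(src, dst) for src in pods for dst in pods if src != dst}


def expected_probe(src: str, dst: str) -> bool:
    """Stand-in for a real kubectl exec + curl check.

    pod-db is the only pod selected by the policy, and it accepts traffic
    only from pod-back (on port 80).
    """
    if dst == "pod-db":
        return src == "pod-back"
    # Pods not selected by any policy keep the allow-all default.
    return True


def render(matrix: Dict[Tuple[str, str], bool]) -> None:
    for (src, dst), ok in sorted(matrix.items()):
        print(f"{src:>9} -> {dst:<9} {'allowed' if ok else 'BLOCKED'}")


matrix = connectivity_matrix(PODS, expected_probe)
render(matrix)
```

Swapping `expected_probe` for a function that actually runs curl inside each pod turns the same matrix into a quick regression check after every policy change.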

To summarize:

- By default, every pod in the cluster can communicate with every other pod.

- Network Policies can be used to override this default behaviour and restrict pod-to-pod communication.

I write to remember, and if in the process I can help someone learn about Containers, Orchestration (Docker Compose, Kubernetes), GitOps, DevSecOps, VR/AR, Architecture, and Data Management, that is just icing on the cake.