K8s Scheduling: Taints and Tolerations

Just like Anti-Affinity rules, Taints and Tolerations can be used to repel Pods from certain Nodes.

The Control Plane Node is a prime example: its Taints keep ordinary Pods from being scheduled on it unless those Pods carry a matching Toleration.

Table of Contents

- Mechanisms to influence Pod deployment

- Taints and Tolerations

- Control Plane and its Taints

- Demo: Pod Placement using Taints and Tolerations

Mechanisms to influence Pod deployment

K8s has at least 5 different ways of controlling where Pods get scheduled:

- Node Selectors

- Affinity and Anti-Affinity

- Taints and Tolerations

- Node Cordoning

- Manual Scheduling

Taints and Tolerations

Taints let a Node repel Pods, while Tolerations allow a Pod to be scheduled as normal on a Tainted Node, provided the Toleration matches the Taint.

Taints are applied to a Node, while Tolerations, like Affinity/Anti-Affinity rules, are applied to the Pod. Simply put, a Pod will not be scheduled on a Node carrying a NoSchedule Taint UNLESS the Pod has a Toleration in its spec that matches that Taint.
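The following minimal shell sketch makes the matching rule concrete by comparing a single Toleration against a single Taint. It is a simplified model written for illustration, not the scheduler's actual code. The tolerates function and its argument order are hypothetical, and it only covers the key, operator (Equal or Exists), value and effect fields.

```shell
# tolerates TAINT TOL_KEY TOL_OPERATOR TOL_VALUE TOL_EFFECT
# Hypothetical helper: a simplified model of Taint/Toleration matching.
# TAINT uses the key=value:Effect form accepted by "kubectl taint".
# Exit status 0 means the Toleration matches the Taint.
tolerates() {
  local taint="$1" tol_key="$2" tol_op="$3" tol_value="$4" tol_effect="$5"
  local kv="${taint%:*}"        # "key=value" part (or just "key")
  local effect="${taint##*:}"   # NoSchedule, PreferNoSchedule or NoExecute
  local key="${kv%%=*}"
  local value=""
  case "$kv" in *=*) value="${kv#*=}" ;; esac

  # An empty Toleration effect matches every effect
  if [ -n "$tol_effect" ] && [ "$tol_effect" != "$effect" ]; then return 1; fi
  # An empty Toleration key (only valid with Exists) matches every key
  if [ -n "$tol_key" ] && [ "$tol_key" != "$key" ]; then return 1; fi

  case "$tol_op" in
    Exists) return 0 ;;                       # any value is accepted
    Equal|"") [ "$tol_value" = "$value" ] ;;  # values must match exactly
    *) return 1 ;;                            # unknown operator
  esac
}
```

For instance, tolerates "key=MyTaint:NoSchedule" key Equal MyTaint NoSchedule succeeds, while the same call with a different value or effect fails.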

Control Plane and its Taints

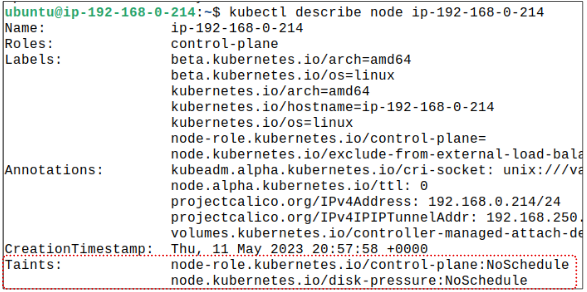

By default, user Pods (Pods running user application containers) are not deployed on the Control Plane. This is easy to confirm by using the kubectl describe command to inspect the Control Plane's metadata.

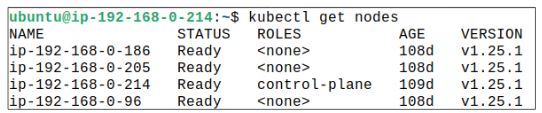

For this article, we are using a 4 Node cluster with 1 Control Plane and 3 Worker Nodes.

Looking at the Control Plane's metadata (using kubectl describe node ip-192-168-0-214), the Taints field lists its NoSchedule taints.

These NoSchedule taints stop any Pod that lacks a matching Toleration in its spec from being placed on the Control Plane.
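The snippet below sketches what such an overriding Toleration looks like by writing a Pod manifest that tolerates the Control Plane Taint. It assumes a kubeadm-built cluster, where the Taint key is typically node-role.kubernetes.io/control-plane (older releases used node-role.kubernetes.io/master). The Pod name and image are hypothetical.

```shell
# Hypothetical Pod that tolerates the Control Plane's NoSchedule Taint.
# Assumes a kubeadm-style Taint key; check "kubectl describe node" for yours.
cat > control-plane-tolerant-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: cp-tolerant-pod            # hypothetical name
spec:
  containers:
    - name: hello
      image: nginx                 # hypothetical image choice
  tolerations:
    - key: node-role.kubernetes.io/control-plane
      operator: Exists             # tolerate this key whatever its value
      effect: NoSchedule
EOF
```

Applying it with kubectl apply -f control-plane-tolerant-pod.yaml only makes the Control Plane an allowed target. The scheduler can still pick a Worker Node, so pinning the Pod to the Control Plane would also need a Node Selector or an Affinity rule.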

Demo: Pod Placement using Taints and Tolerations

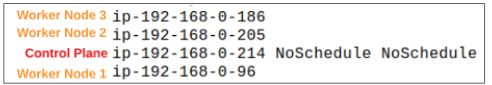

As noted above, the cluster has 4 Nodes: 1 Control Plane Node and 3 Worker Nodes.

| Node Type | IP |
|---|---|
| Control Plane | 192.168.0.214 |
| Worker Node 1 | 192.168.0.96 |
| Worker Node 2 | 192.168.0.205 |
| Worker Node 3 | 192.168.0.186 |

Step 1: Check the Taints on each of the Worker Nodes.

The NoSchedule Taints on every Node (the Control Plane included) can be listed using:

kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name} {.spec.taints[?(@.effect=="NoSchedule")].effect}{"\n"}{end}'

Step 2: Apply a NoSchedule Taint on Worker Node 3.

A Taint with key "key", value "MyTaint" and effect NoSchedule can be applied to Worker Node 3 (ip-192-168-0-186) using:

kubectl taint nodes ip-192-168-0-186 key=MyTaint:NoSchedule

If we run the kubectl command from Step 1 again, we see that Worker Node 3 now has a NoSchedule Taint on it.

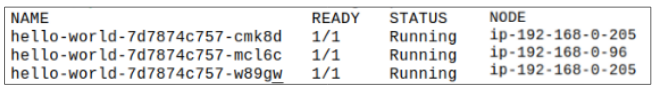

Step 3: Deploy the manifest for a simple hello-world Deployment with 3 replicas.
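The original manifest is not reproduced in the text, so the following is a hedged reconstruction of a 3 replica hello-world Deployment with no Toleration. The names, the labels and the nginx image are assumptions, chosen so that the container keeps running.

```shell
# Sketch of a 3-replica hello-world Deployment WITHOUT any Toleration.
# Names, labels and image are assumptions, not the article's exact manifest.
cat > hello-world-deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-world
spec:
  replicas: 3
  selector:
    matchLabels:
      app: hello-world
  template:
    metadata:
      labels:
        app: hello-world
    spec:
      containers:
        - name: hello-world
          image: nginx             # stand-in image that keeps running
          ports:
            - containerPort: 80
EOF
```

It could be deployed with kubectl apply -f hello-world-deployment.yaml. Running kubectl get pods -o wide then lists the Node each replica was scheduled on.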

As mentioned earlier, a Pod with a Toleration that matches a Node's Taint can be scheduled on that Node. Since this Deployment has no Toleration, none of its replicas can be scheduled on Worker Node 3 (or on the Control Plane).

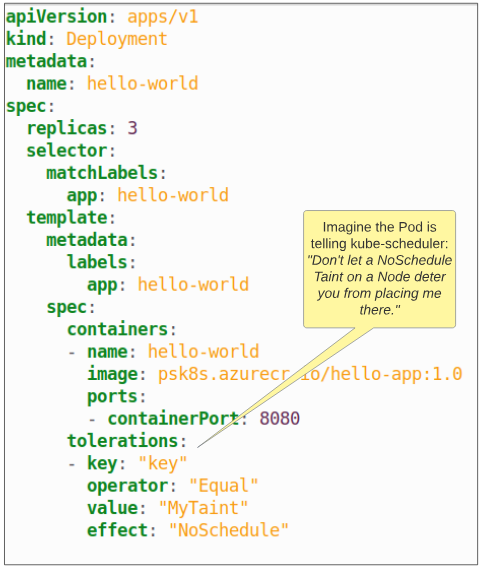

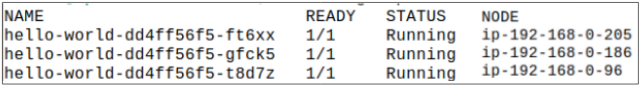

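By this logic, if we update our Deployment spec with a Toleration that matches Worker Node 3's Taint, the replica Pods become eligible to run on Worker Node 3. A Toleration only permits placement there and does not force it, so the scheduler still decides which eligible Node each replica lands on.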

Step 4: Update the Deployment spec by adding a Toleration to it.
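Below is a sketch of the updated Deployment. It assumes hypothetical names, labels and an nginx image for the hello-world Deployment, and it adds a Toleration that matches the Taint from Step 2 (key=MyTaint:NoSchedule, that is, key "key" and value "MyTaint").

```shell
# Sketch of the hello-world Deployment WITH a Toleration for Worker Node 3.
# Names, labels and image are assumptions; the Toleration mirrors the
# Taint applied in Step 2 (key=MyTaint:NoSchedule).
cat > hello-world-deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-world
spec:
  replicas: 3
  selector:
    matchLabels:
      app: hello-world
  template:
    metadata:
      labels:
        app: hello-world
    spec:
      containers:
        - name: hello-world
          image: nginx             # stand-in image that keeps running
      tolerations:
        - key: key                 # the Taint key set in Step 2
          operator: Equal
          value: MyTaint           # must equal the Taint value
          effect: NoSchedule
EOF
```

Re-applying the file with kubectl apply -f hello-world-deployment.yaml triggers a rollout, and kubectl get pods -o wide then shows where the new replicas landed. Worker Node 3 is now an allowed target, but the scheduler still weighs all eligible Nodes, so a replica is not guaranteed to land there.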

Step 5: Re-apply the Deployment using the updated manifest.

I write to remember and if in the process, I can help someone learn about Containers, Orchestration (Docker Compose, Kubernetes), GitOps, DevSecOps, VR/AR, Architecture, and Data Management, that is just icing on the cake.