GitOps w/ FluxCD: Configuration Management.

Software systems are birthed in a development environment, validated in a testing/QA environment, demo-ed for users in a staging environment, and made accessible to the public in a production environment.

Ideally, software moves through these environments without human intervention: what worked and was acceptable in development (or test/QA) should automatically work everywhere downstream. Unfortunately, this rarely happens, because what is good for the goose in dev and QA may not be good for it in staging and production. Managing system configuration across environments (lovingly called Configuration Management) is typically handled with tools like Ansible and Terraform. Kustomize is a Configuration Management tool in the same spirit as Ansible, but it is dedicated to K8s and plays a crucial role in automating the application of environment-specific cluster configuration values.

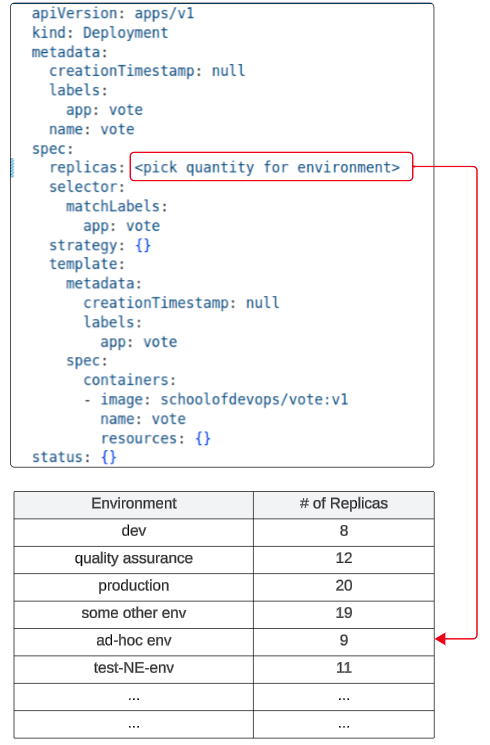

For example...

...assume you are engineering a cluster (and managing it) across three different environments: development, quality assurance, and production.

Typically, development is the least difficult environment to set up and manage. As the cluster moves from development to quality assurance to production, overall specs get more dynamic ("to ensure system reliability, for every Pod launched in dev, I want 10 Pods launched in quality assurance and 15 in production") or very precise ("dev used v1.0.1 of the container image, but quality assurance and production will use v1.0.2 and v1.0.0 until further notice").

As the number of environments keeps increasing, so does the risk of fat-fingering an incorrect configuration value, which results in wasted time and definite heartache.

Here Kustomize can be very useful.

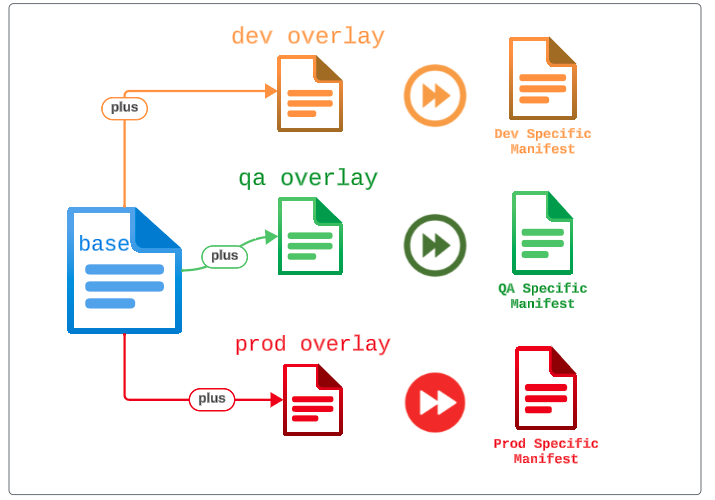

Kustomize can manage both standard and specific configuration value injection and employs the concepts of base configurations (system values that do not change across environments) and overlays (values that are specific to each environment) to do so.

Kustomize will merge the base configurations with the overlays for each environment to create a manifest.
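To make the base/overlay idea concrete, here is a minimal sketch built in a throwaway directory. The app name "web", the nginx image tag, the overlays/production folder name, and the replica counts are illustrative assumptions, not part of the Instavote setup used later in this article.

```shell
# Sketch only: names, image, and replica counts are illustrative assumptions.
demo_dir="$(mktemp -d)"
mkdir -p "$demo_dir/base" "$demo_dir/overlays/production"

# Base: values shared by every environment.
cat > "$demo_dir/base/deployment.yaml" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - name: web
        image: nginx:1.25
EOF

cat > "$demo_dir/base/kustomization.yaml" <<'EOF'
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
- deployment.yaml
EOF

# Overlay: only the values that differ in production.
cat > "$demo_dir/overlays/production/replicas.yaml" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 15
EOF

cat > "$demo_dir/overlays/production/kustomization.yaml" <<'EOF'
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
- ../../base
patchesStrategicMerge:
- replicas.yaml
EOF

# Render the merged manifest, but only if kustomize is on the PATH.
if command -v kustomize >/dev/null 2>&1; then
  kustomize build "$demo_dir/overlays/production"
fi
```

If kustomize is installed, the rendered Deployment should carry everything from the base with replicas taken from the overlay. Recent kustomize releases may print a deprecation warning for patchesStrategicMerge; the field is used here only because it matches the rest of this article.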

As always, let's see this in a demo.

Demo: Configuration Management for K8s clusters w/ Kustomize.

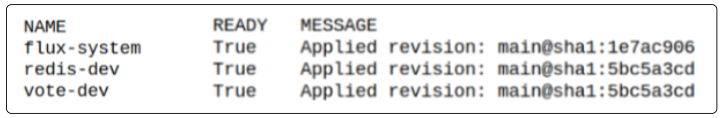

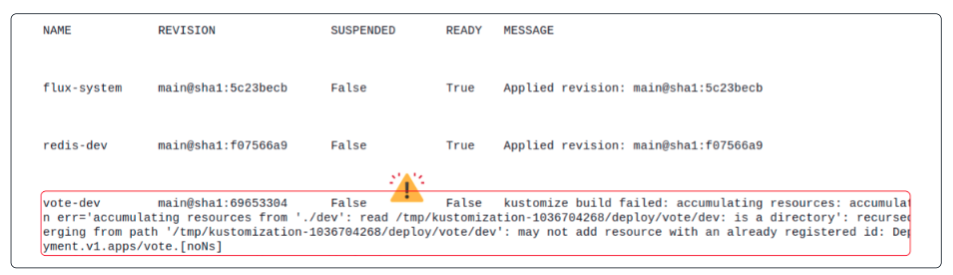

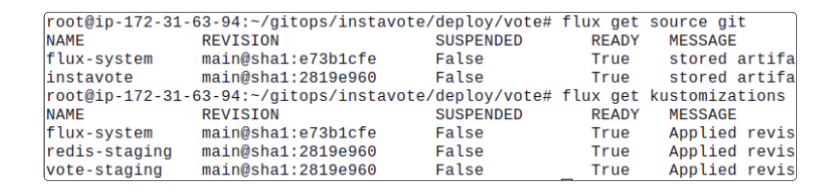

Before we proceed, let's check the current state of Kustomizations.

All Kustomizations are active and working as expected.

1. Kustomize binary

Kustomize expects all bases and overlays to be arranged in a certain folder structure.

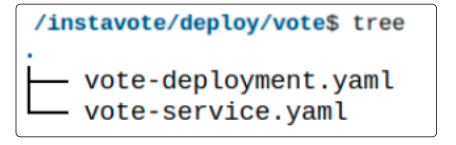

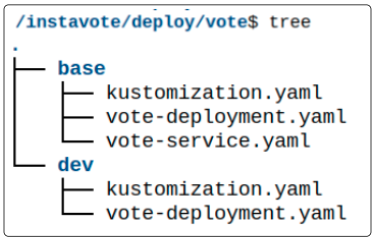

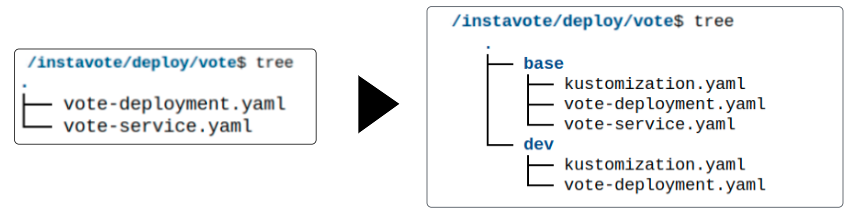

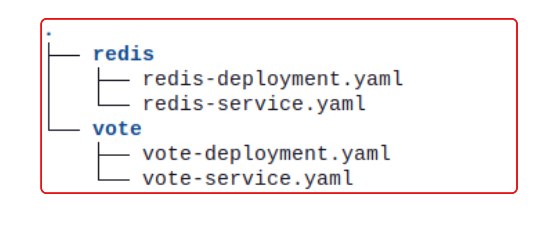

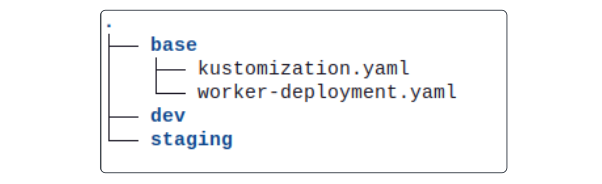

So far, over the course of the last three articles, we arranged our manifests as shown below.

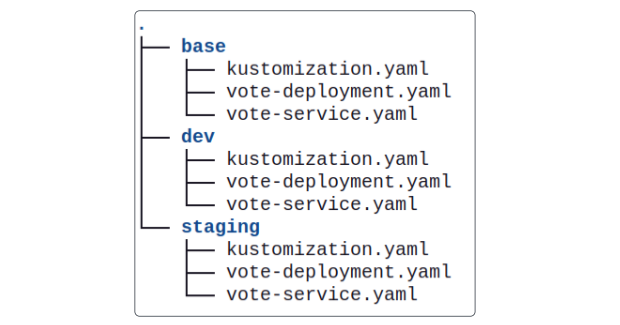

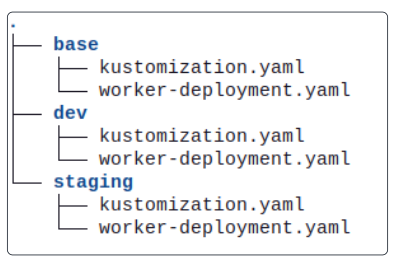

Kustomize, however, expects the following type of folder structure.

Therefore, we must add two folders and some additional YAML files to satisfy Kustomize's expectations.
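As a rough picture of where the steps in this article end up, the sketch below recreates the target layout for vote in a scratch directory using empty placeholder files. The scratch location is an assumption made so that nothing in the real instavote repo is touched; the folder and file names are the ones used in the steps of this article.

```shell
# Scratch copy of the target layout; every file is an empty placeholder.
layout_root="$(mktemp -d)"
mkdir -p "$layout_root/deploy/vote/base" "$layout_root/deploy/vote/dev"

touch "$layout_root/deploy/vote/base/kustomization.yaml" \
      "$layout_root/deploy/vote/base/vote-deployment.yaml" \
      "$layout_root/deploy/vote/base/vote-service.yaml" \
      "$layout_root/deploy/vote/dev/kustomization.yaml" \
      "$layout_root/deploy/vote/dev/vote-deployment.yaml" \
      "$layout_root/deploy/vote/dev/vote-service.yaml"

# Print the tree relative to the scratch root.
(cd "$layout_root" && find deploy -type f | sort)
```

The listing shows six files: three under deploy/vote/base and three under deploy/vote/dev. The two new folders are base and dev, and each carries its own kustomization.yaml.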

Let's work our way through the basic setup for Kustomize.

Step 1: Install Kustomize

curl -s "https://raw.githubusercontent.com/kubernetes-sigs/kustomize/master/hack/install_kustomize.sh" | bash

# Move Kustomize binary to local path

mv kustomize /usr/local/bin/

# Check Kustomize was installed using which

which kustomize

# A properly installed kustomize prints a list of help topics when run with no arguments

Step 2: Make a base folder inside vote.

# Position context at root of vote folder

mkdir base

# Move both vote-deployment.yaml and vote-service.yaml to base

git mv *.yaml base/

Step 3: Generate a base kustomization file.

# Change to base directory

cd base

# Generate base kustomization file

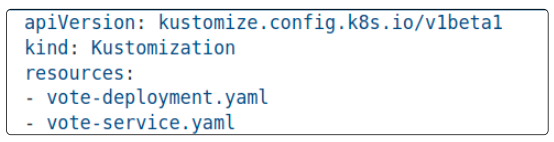

kustomize create --autodetect

Kustomize will notice the two files in the folder (vote-deployment.yaml and vote-service.yaml) and, using this information, create a kustomization.yaml file.

Step 4: Create an overlay folder inside vote and call it dev.

# Make a new folder inside vote and name it dev

# Assuming you are not at the root of the vote directory

cd ../

# Once at the root of the vote folder...

mkdir dev

# Navigate to the dev folder

cd dev

# Create a kustomization.yaml file here

cat > kustomization.yaml

apiVersion: kustomize.config.k8s.io/v1beta1

kind: Kustomization

resources:

- ../base

patchesStrategicMerge:

- vote-deployment.yaml

# Save file by pressing CTRL+D

# Now create the patch file for vote-deployment.yaml in dev

cat > vote-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: vote
  name: vote
spec:
  replicas: 3
  template:
    spec:
      containers:
      - image: schoolofdevops/vote:v1
        name: vote

# Save file using CTRL+D

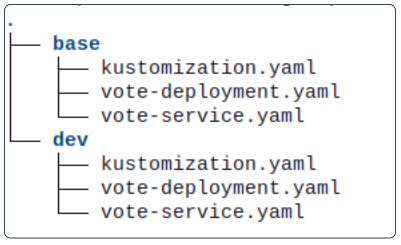

Also copy vote-service.yaml from the base folder to the dev folder.

At this point, the vote directory should resemble the image below.

Step 4: Save all changes to GitHub Instavote repo.

git add *

git commit -m "Added first overlay to vote"

git push origin main

Confirm the check-in by eyeballing the instavote repo on GitHub.

Allowing time for the reconciliation to finish, check the health of the Kustomizations created so far.

flux get kustomizations

The vote-dev Kustomization was working fine, but something we changed has introduced an error.

Per the error message in the image, the obvious culprit is the vote-dev Kustomization.

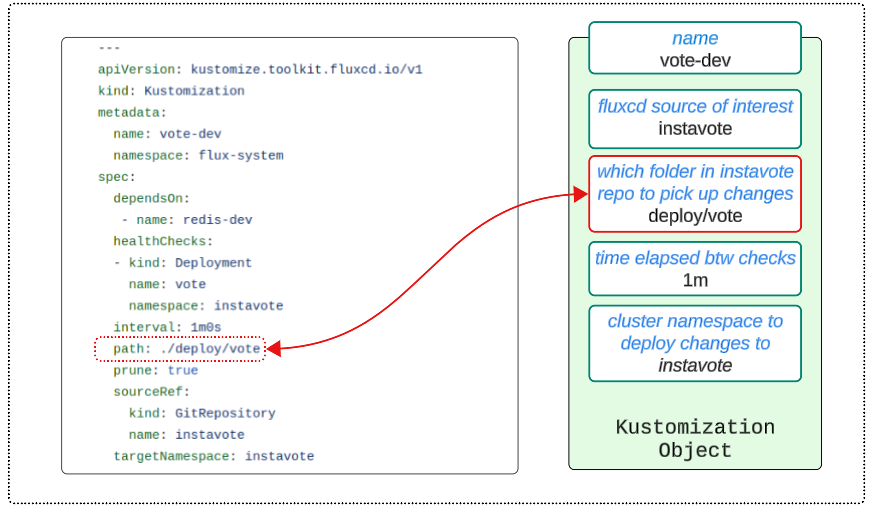

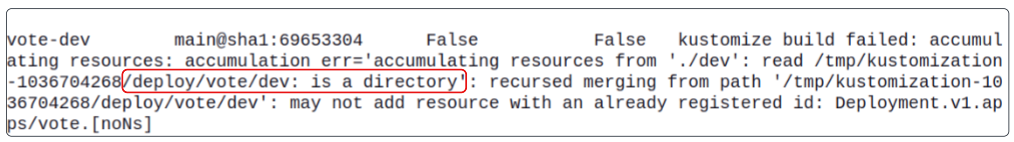

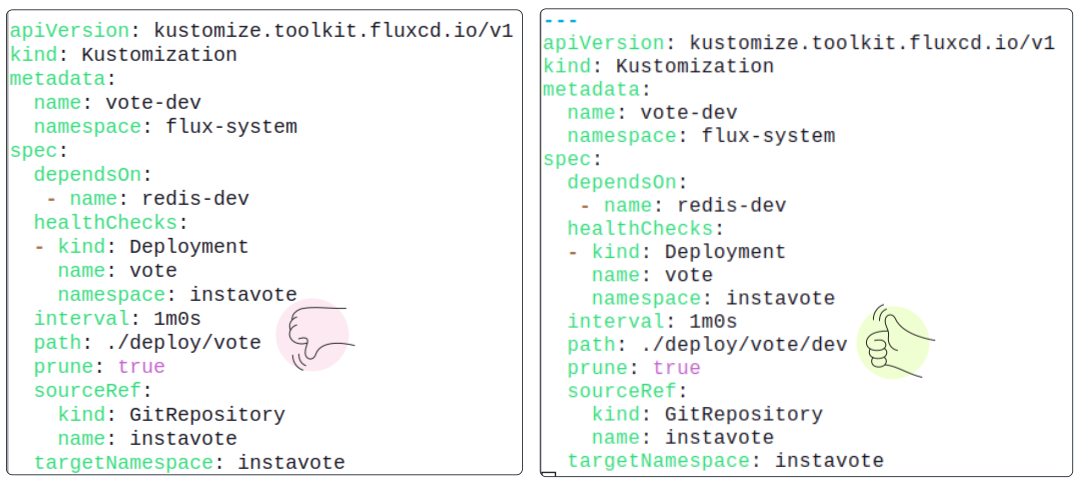

Kustomization/vote-dev is looking at the YAML contents of the folder /deploy/vote in the instavote repository. The error message, however, is complaining that '/deploy/vote/dev: is a directory.'

As per our investigation, Kustomization/vote-dev is picking up YAML files from the /deploy/vote folder in the instavote GitHub repo, while the error message says (and I paraphrase):

Kustomize build failed because there is no YAML file directly inside the /deploy/vote folder. Instead, /deploy/vote contains another folder called dev, which leaves no YAML manifests to work on at the /deploy/vote level.

Is the error message just gibberish? No harm in looking up the folder structure for instavote/deploy on GitHub to confirm.

Suddenly, the root cause of the error becomes obvious. As part of this article, we reorganized the /deploy/vote folder and added a dev sub-folder. It is inside dev where our YAML manifests have been saved. The fix to this error is straightforward. We have to modify the value of the path attribute in the vote-dev-kustomization.yaml file.

Step 5: Edit vote-dev-kustomization.yaml file.

The vote-dev-kustomization.yaml file has to be modified, and the path where it looks for manifests has to be updated.
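The change itself is a one-line edit to the path attribute. The sketch below applies it to a stand-in copy of the file in a scratch directory. The manifest contents are assumptions for illustration (in particular the apiVersion, interval, prune, sourceRef, and targetNamespace values); only the file name, the Kustomization name, and the old and new paths come from this article.

```shell
# Stand-in for vote-dev-kustomization.yaml; field values other than
# metadata.name and spec.path are illustrative assumptions.
work_dir="$(mktemp -d)"
cat > "$work_dir/vote-dev-kustomization.yaml" <<'EOF'
apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: vote-dev
  namespace: flux-system
spec:
  interval: 1m0s
  path: ./deploy/vote
  prune: true
  sourceRef:
    kind: GitRepository
    name: instavote
  targetNamespace: instavote
EOF

# Point the Flux Kustomization at the dev overlay instead of its parent folder.
sed 's|path: ./deploy/vote$|path: ./deploy/vote/dev|' \
  "$work_dir/vote-dev-kustomization.yaml" > "$work_dir/updated.yaml"
mv "$work_dir/updated.yaml" "$work_dir/vote-dev-kustomization.yaml"

# Show the updated line.
grep 'path:' "$work_dir/vote-dev-kustomization.yaml"
```

Editing the file by hand works just as well. Either way, the change only takes effect once the file is committed and pushed to the flux-infra repo and Flux reconciles.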

That was quite a journey, but I still don't understand the benefits of using Kustomize.

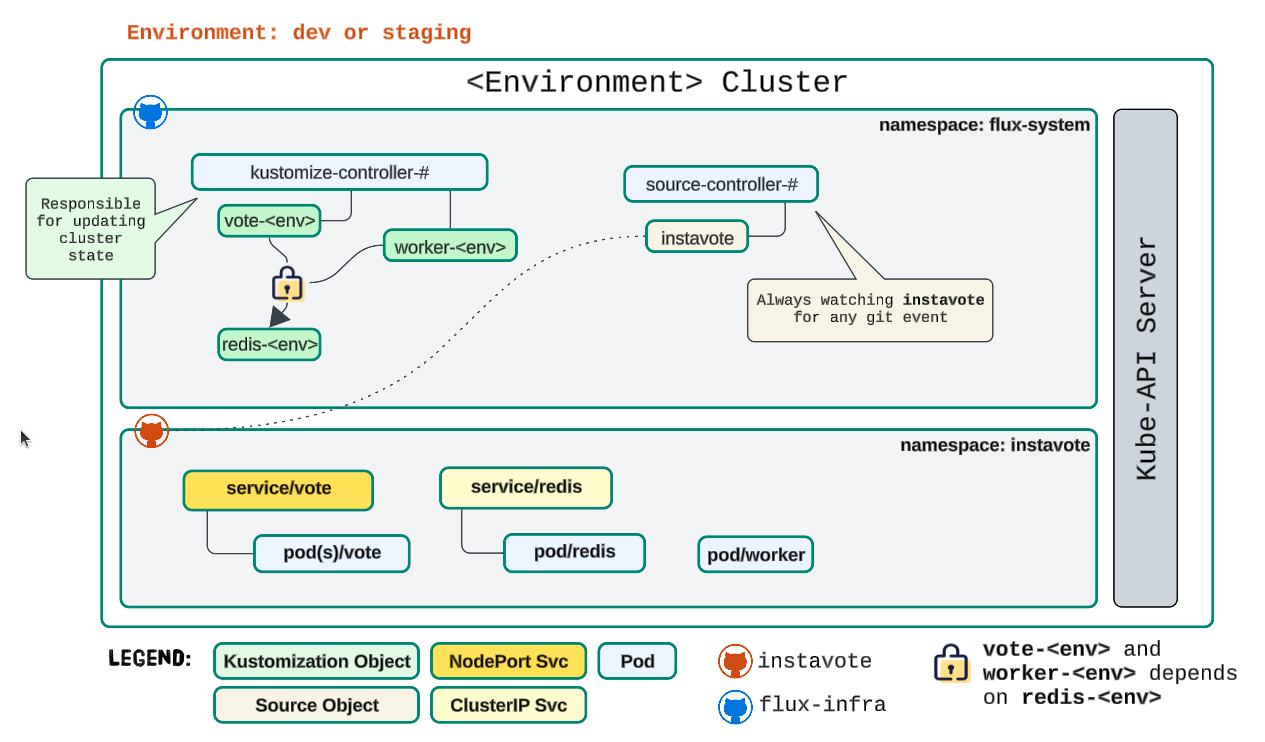

To truly highlight the convenience Kustomize provides us, we have to set up multiple demo clusters (one for dev and perhaps another for staging) and use the base-overlay approach to showcase how we can easily automate the setting of configuration values across different cluster-environment combinations.

For documentation, go to the official GCP site.

Step 6: Bootstrap the staging cluster for GitOps/FluxCD.

Bootstrapping any cluster with FluxCD follows a standard approach (detailed in this article).

# Auto-generated Context Names are long and hard to type.

# Consider shortening them by using the commands provided below

# To list ALL contexts

kubectl config get-contexts

# To change name of a context

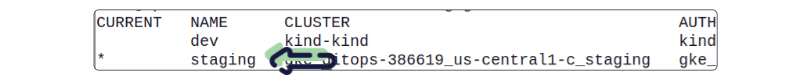

kubectl config rename-context <old name> <new name>

For this article, the following two cluster contexts are being used.

- Create a new namespace (instavote) in the staging context

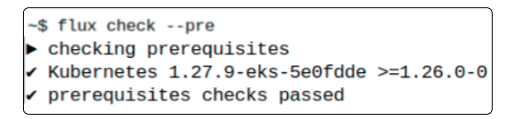

kubectl create ns instavote

- Run a pre-bootstrapping check (flux check --pre) to confirm prerequisites are already in place.

- Run the bootstrapping command using FluxCD CLI.

flux bootstrap github

--owner=$GIT_USER \

--repository=flux-infra \

--branch=main \

--path=./clusters/staging \

--personal \

--network-policy=false \

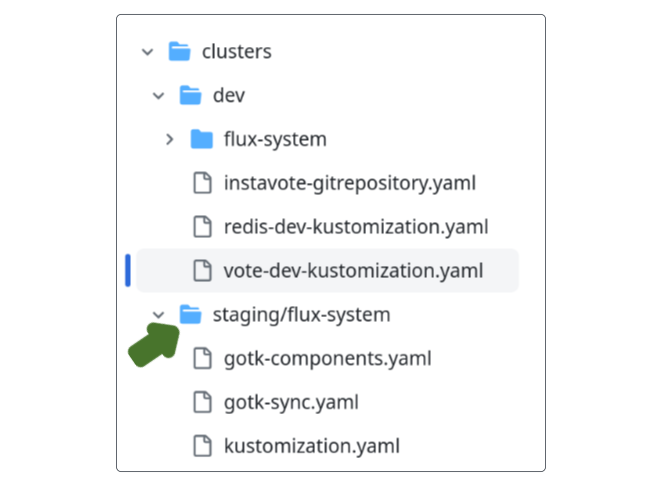

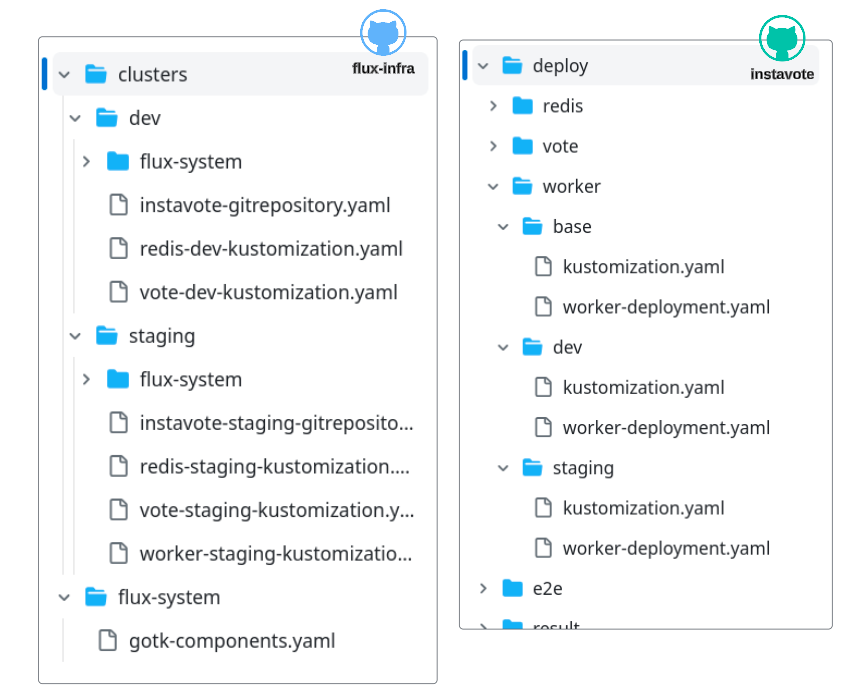

--token-auth- Notice the --path attribute and its assigned value. Earlier, in the first article in this series, the value for --path was set to ./clusters/dev. We should go to our flux-infra repo and confirm that /clusters has a /dev and a /staging folder.

- The --components attribute has also been removed, allowing all default components to get installed.

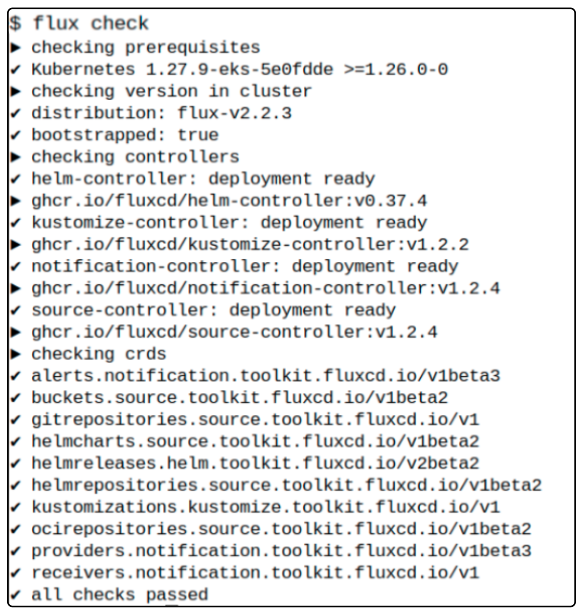

- Run flux check and check installed components.

- Navigate to the flux-infra/clusters/ folder and copy the YAML files from /dev/ to /staging.

# change directory to flux-infra/clusters.

cp dev/instavote-gitrepository.yaml staging/instavote-staging-gitrepository.yaml

cp dev/redis-dev-kustomization.yaml staging/redis-staging-kustomization.yaml

cp dev/vote-dev-kustomization.yaml staging/vote-staging-kustomization.yaml

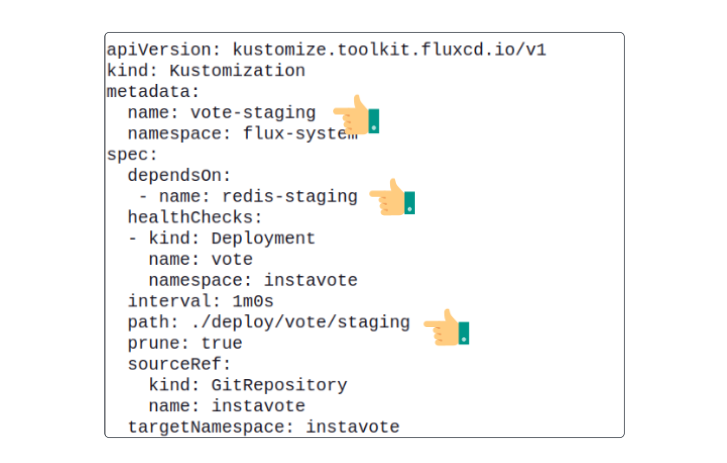

- Edit the contents of staging/vote-staging-kustomization.yaml.

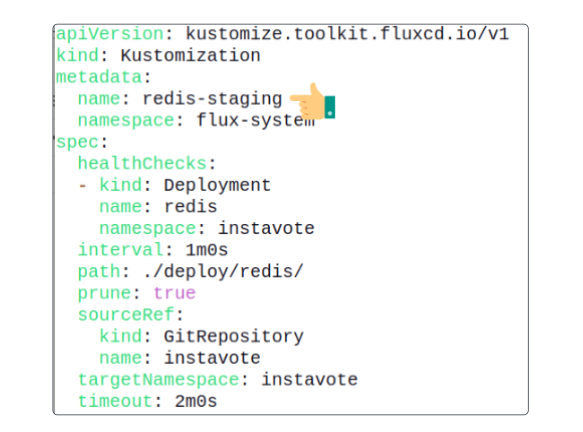

- Edit the contents of staging/redis-staging-kustomization.yaml.

- Add the new folder /clusters/staging to the flux-infra GitHub repository.

After allowing a period for automatic reconciliation, list the GitRepository Source and vote-staging and redis-staging Kustomizations.

flux get source git

flux get kustomizations

- Navigate to the /instavote/deploy/vote/ folder and execute the following series of commands.

# Back in /deploy/vote

mkdir staging

cp dev/*.yaml staging/

- Without making any changes, check in the new vote/staging folder to GitHub.

- Allowing for a period of reconciliation, list the Kustomizations and GitRepository Source.

flux get source git

flux get kustomizations

The moment we've been waiting for has finally arrived; some examples of how Kustomize manages cluster states across multiple environments follow.

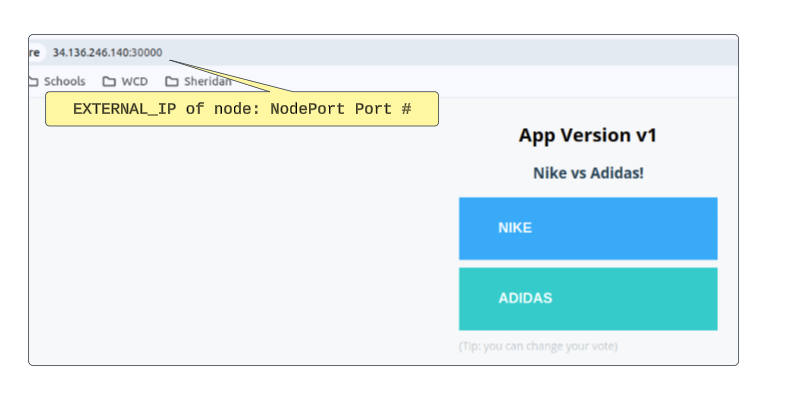

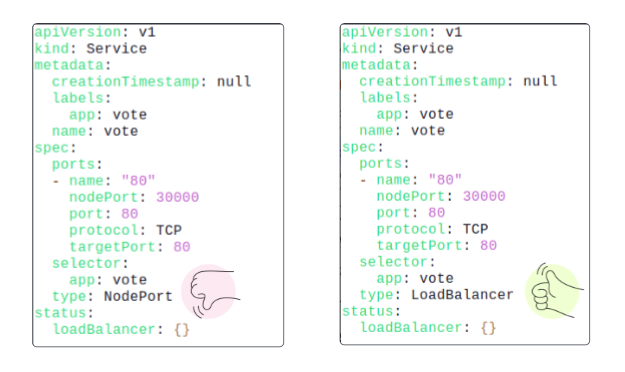

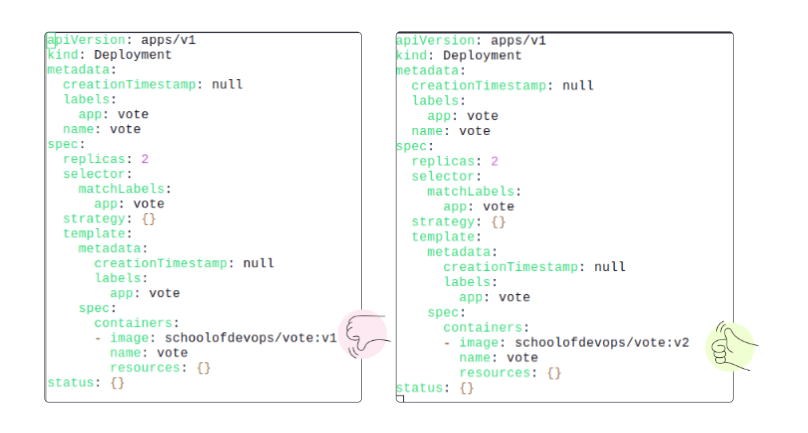

Demo # 1: Changing Vote Service in Staging from NodePort to LoadBalancer (only for GCP-based staging cluster) and/or changing the Vote UI version from 1 to 2.

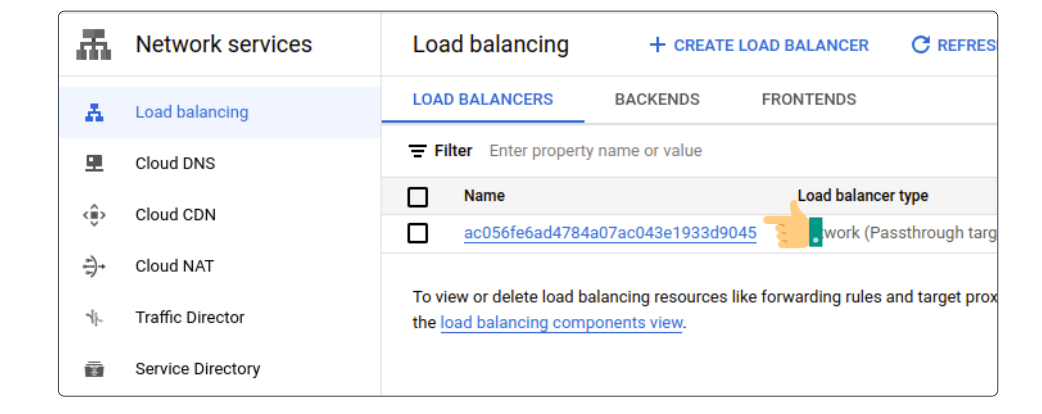

We assume you used GCP and have no Load Balancers set up when attempting this exercise.

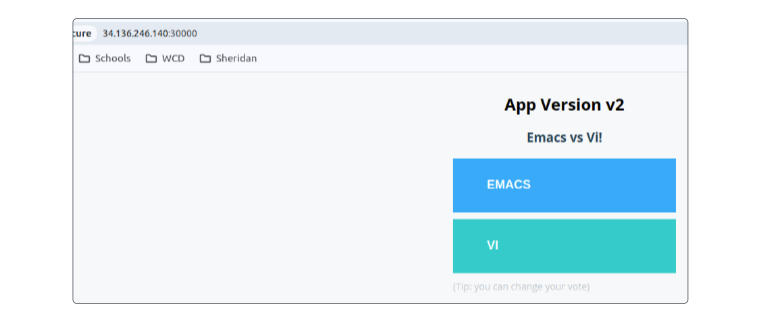

- Test the vote UI app by loading it into a browser using a public-facing IP address (which can be found using the steps provided below)

# Find the names of nodes hosting vote Pods under the NODE column

kubectl get pods -o wide -n instavote

# Find the external IP of one of the nodes under EXTERNAL-IP column

kubectl get node <node name> -o wide

# Find the Port # assigned to the vote NodePort Service

kubectl get svc vote -n instavote

- Edit the instavote/deploy/vote/staging/vote-service.yaml file

- Finally, edit the instavote/deploy/vote/staging/vote-deployment.yaml file

- Check the modified files into GitHub, and wait for the period of reconciliation to expire.
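The two edits above might look roughly like the patches below, written here into a scratch directory rather than the real repo. The Service name vote and the image repository come from earlier steps; the v2 tag and the exact patch contents are assumptions based on the stated goal of this demo.

```shell
# Scratch stand-in for instavote/deploy/vote/staging.
staging_dir="$(mktemp -d)/deploy/vote/staging"
mkdir -p "$staging_dir"

# Patch 1: switch the Service type; everything else is inherited from base.
cat > "$staging_dir/vote-service.yaml" <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: vote
spec:
  type: LoadBalancer
EOF

# Patch 2: move the vote UI image from v1 to v2 (tag assumed).
cat > "$staging_dir/vote-deployment.yaml" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: vote
spec:
  template:
    spec:
      containers:
      - name: vote
        image: schoolofdevops/vote:v2
EOF

# Show what was written.
cat "$staging_dir/vote-service.yaml" "$staging_dir/vote-deployment.yaml"
```

A patch file is only applied if it is listed under patchesStrategicMerge in the staging kustomization.yaml, so vote-service.yaml needs an entry there alongside vote-deployment.yaml.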

Refresh the browser tab to update the UI.

The vote Service was also converted from a NodePort to a LoadBalancer. Since we had confirmed no LoadBalancers existed before this exercise, it is safe to suggest our manifest was responsible.

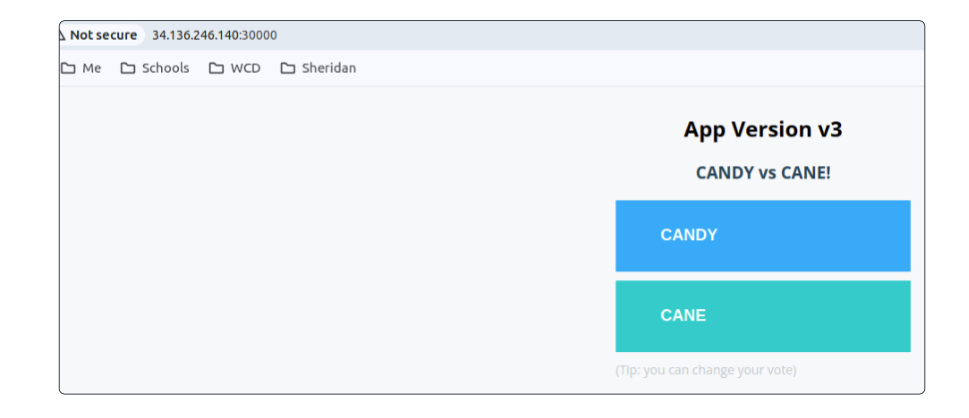

Demo # 2: Changing the options on the vote UI in staging

The staging vote UI shows this.

- Confirm the context is set to staging.

- Edit the /deploy/vote/staging/kustomization.yaml file

apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
- ../base
patchesStrategicMerge:
- vote-deployment.yaml
- vote-service.yaml
# Add this segment
configMapGenerator:
- name: vote
  literals:
  - OPTION_A=CANDY
  - OPTION_B=CANE

- Edit the /deploy/vote/staging/vote-deployment.yaml file

apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: vote
  name: vote
spec:
  replicas: 2
  selector:
    matchLabels:
      app: vote
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: vote
    spec:
      containers:
      - image: schoolofdevops/vote:v3
        name: vote
        # *** Add this ***
        envFrom:
        - configMapRef:
            name: vote
            optional: true
        resources: {}
status: {}

- After the repo push and allowing for a minute to reconcile, refresh the browser screen (pointing to the staging cluster)
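One detail worth knowing about configMapGenerator: by default, Kustomize appends a content hash to the generated ConfigMap's name and rewrites references such as configMapRef to match, so a change to the literals produces a new ConfigMap name and triggers a rollout. The sketch below is a stripped-down, self-contained version of the staging setup (no base folder, scratch directory) that lets you see this locally, assuming kustomize is installed.

```shell
# Minimal stand-alone reproduction; the real staging overlay also pulls in ../base.
cm_dir="$(mktemp -d)"

cat > "$cm_dir/deployment.yaml" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: vote
spec:
  selector:
    matchLabels:
      app: vote
  template:
    metadata:
      labels:
        app: vote
    spec:
      containers:
      - name: vote
        image: schoolofdevops/vote:v3
        envFrom:
        - configMapRef:
            name: vote
EOF

cat > "$cm_dir/kustomization.yaml" <<'EOF'
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
- deployment.yaml
configMapGenerator:
- name: vote
  literals:
  - OPTION_A=CANDY
  - OPTION_B=CANE
EOF

# With kustomize installed, the ConfigMap and the configMapRef should both
# show the same hash-suffixed name instead of plain "vote".
if command -v kustomize >/dev/null 2>&1; then
  kustomize build "$cm_dir" | grep 'name: vote' || true
fi
```

This is why the manifest can refer to the ConfigMap simply as vote: Kustomize keeps the generated name and every reference to it in sync at build time.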

Don't let the simplicity of these demos fool you. A little imagination here will go a long way. Using this approach makes configuring multiple environments simple and manageable. You could add /deploy/vote/qa or /deploy/vote/prod and manage deployment across multiple environments in one place.

Before we continue...

we will set up the folder structure for the Worker application. The steps to follow are the same as those for previous applications.

# Ensure context is staging

kubectl config current-context

# If not staging...

kubectl config use-context staging

# Go to the root of /instavote/deploy and make a new worker folder

mkdir worker

# Change to worker

cd worker

# Create 3 folders inside worker: base, dev and staging

mkdir base && mkdir dev && mkdir staging

# Change to base

cd base

# Generate the Deployment manifest for worker in dry-run mode

kubectl create deployment worker \
  --image schoolofdevops/worker:latest \
  -n instavote --dry-run=client -o yaml

The resulting YAML is shown below.

apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: worker
  name: worker
  namespace: instavote
spec:
  replicas: 1
  selector:
    matchLabels:
      app: worker
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: worker
    spec:
      containers:
      - image: schoolofdevops/worker:latest
        name: worker
        resources: {}
status: {}

- Run the same command again, this time redirecting the output into a worker-deployment.yaml file.

kubectl create deployment worker \
  --image schoolofdevops/worker:latest \
  -n instavote --dry-run=client -o yaml > worker-deployment.yaml

- Create a Kustomization manifest.

kustomize create --autodetect

Print the structure of /deploy/worker/.

- Copy base/worker-deployment.yaml to dev/ and staging/.

# Run these from the worker folder

cp -r base/worker-deployment.yaml dev/

cp -r base/worker-deployment.yaml staging/

- Change to the dev folder and create a Kustomization.

kustomize create --autodetect

- Repeat the same step for the staging folder.

# Assuming you are in the worker/dev folder, navigate to the staging folder

cd ..

cd staging

# Run kustomize create

kustomize create --autodetect

- Check the new folders and files into the instavote repo.

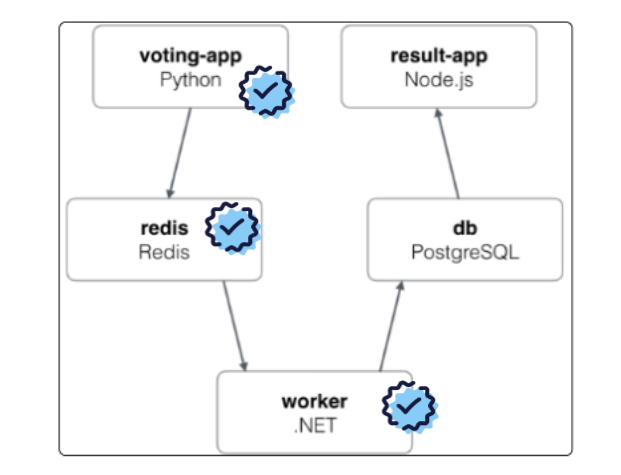

Architecturally Speaking

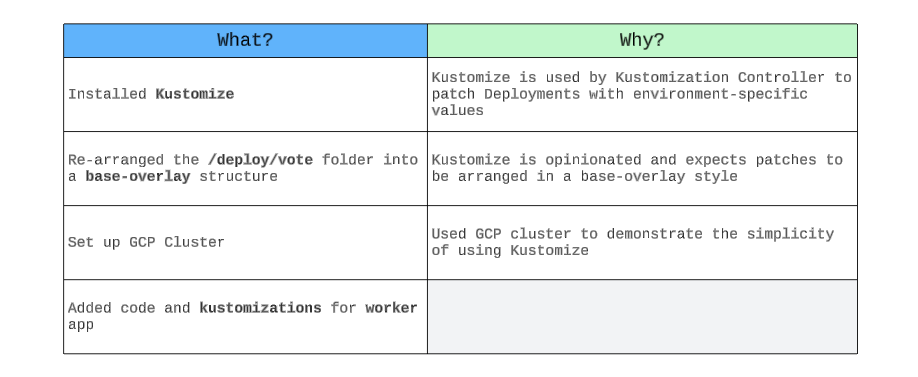

Summary: What and Why?

Instavote Application Components Deployed So Far

GitHub Folder Structure

I write to remember, and if, in the process, I can help someone learn about Containers, Orchestration (Docker Compose, Kubernetes), GitOps, DevSecOps, VR/AR, Architecture, and Data Management, that is just icing on the cake.