Data Integrations: Not as 'easy' as we think?

Let's start with a basic definition of Data Integration

Data integration is the process of combining data from multiple sources to assist analysis, generate insights and making informed decisions for us.

Data concepts are everywhere; It's getting hard to differentiate between them.

Agreed.

I have heard data integrations being described as part of a data management strategy (which I agree with) and as the precursor to data governance (which is an anti-pattern in my view). Such experiences, and countless others not mentioned here, made me realize that there might be a real use for a quick walkthrough of defining data concepts.

Data Concept # 1: Data Warehousing

- Process of collecting, storing, and managing large volumes of data from various sources in a centralized repository.

- Requires data integrations.

- Includes activities like data modelling, indexing, and query optimization to enable efficient data retrieval and analysis.

Data Concept # 2: Data Migration

- Transfer of data from one system or storage location to another.

- May require data integrations to assist migration.

Data Concept # 3: Data Virtualization

- Allows organizations to access, aggregate, and manipulate data from different sources without physical data movement or storage.

- Does not require traditional data integration techniques.

Data Concept # 4: Data Federation

- Strongly related to Data Virtualization.

- Unifies the view of data from multiple sources without the need to move or store the data in a central storage.

Data Concept # 5:Data Synchronization

- Maintains consistency and accuracy across multiple copies of data stored in different locations or systems.

- Ensures changes to one data source are automatically reflected in all other copies.

Data Concept # 6: Data Quality Management

- The practice of managing accuracy, consistency, and reliability of data throughout its life cycle.

- Involves data cleansing, deduplication, validation, and enrichment.

Data Concept # 7: Data Vault

- An approach to designing enterprise data warehouses which combines the best aspects of third normal form (3NF), Data Sources and Types, dimensional modelling, and other design principles to create a flexible, scalable, and adaptable data architecture that can accommodate rapidly changing business requirements and easily integrate new data sources.

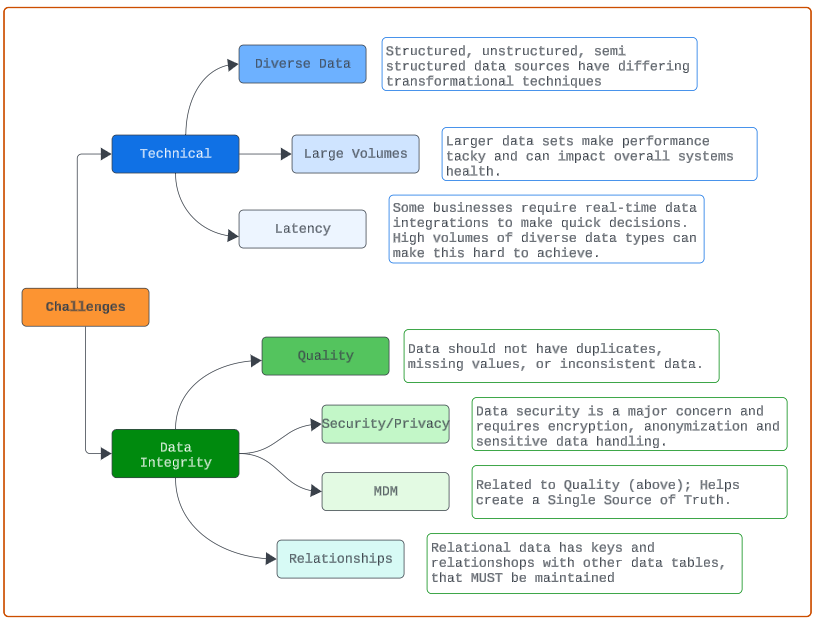

Why is Data Integration Challenging?

Because it requires a keen sense of technical expertise with an equally sharp focus on data integrity (right data, right time, right person).

Data Integration Techniques

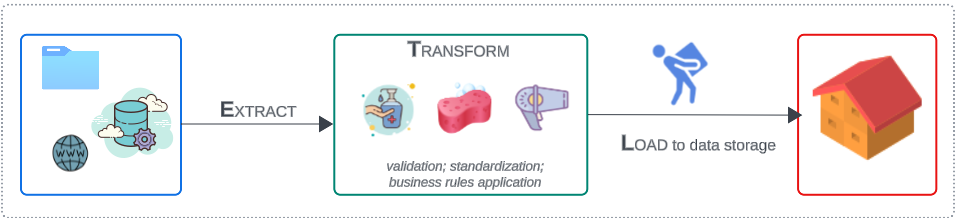

Technique # 1: Extract, Transform, Load (ETL)

- Extract the data from wherever it is stored.

- Tools connect to these sources using connectors or APIs and read the data.

- Transformation is the most complex and, arguably, the most important step in ETL.

- Data is transformed into a suitable format/model for analysis.

- Includes data cleaning, normalization, enrichment, and filtering.

- Loading is putting the transformed data into a target data storage.

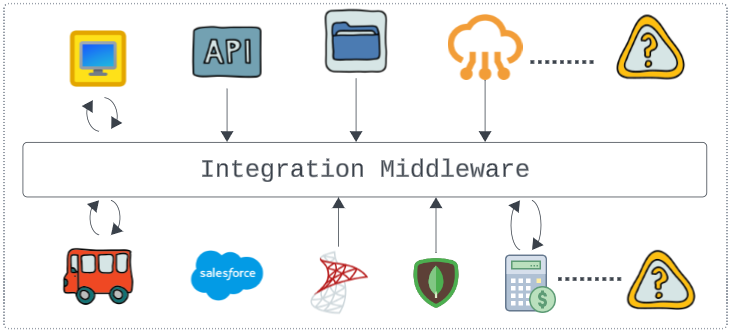

Technique # 2: Integration Middleware

Integration middleware is a 'construction artist' making 'bridges' between applications, databases, platforms, APIs, shared folders and other data storages. This middleware, along with connecting to sources to 'feed off their data', can also apply data quality, security and business rules. Examples of integration middleware are message queues, enterprise service buses and data orchestration tools like Azure Data Factory or AWS Glue.

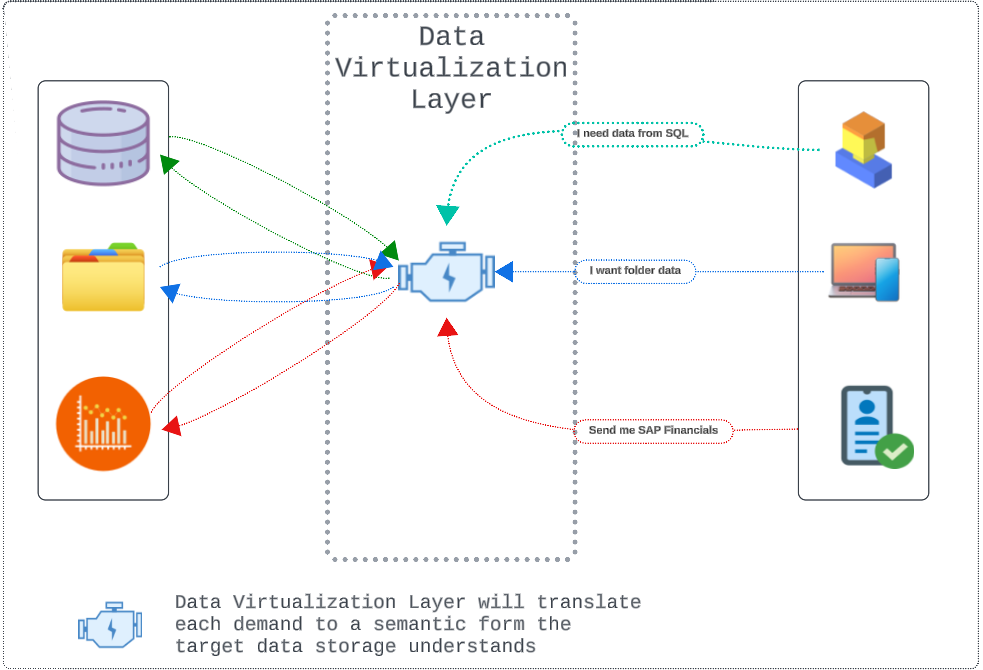

Technique # 3: Data Virtualization

Think of a Data Mart. A data mart

is a specialized subset of a data warehouse, designed to focus on a specific business line, department, or team. Think of it as a mini data warehouse that contains only the relevant data needed by a particular group within an organization.

Data virtualization tools are typically configured to support data extraction needs from multiple data sources (of differing types), from a single virtualization plane.

How Does One Choose the Best Data Integration Technique/Tool?

Short answer: after a thorough understanding of your needs.

Always consider:

- System Processing Capabilities

- Does the tool have data governance features?

- Does the tool have strong security built-in?

- Does the tool provide data enrichment and transformation?

- Business Needs

- Is the tool the best choice for achieving outcomes?

- Total Cost of Ownership (includes licensing, maintenance, consulting and training costs)

- Does the team know much about the tool/technique?

- If no,

- Is there a plan to train the team?

- User Experience

- Is the tool's experience designed with intuitiveness and ease of use?

- Technology/Tools Adaptiveness

- Is the tool scalable? It may be able to manage data volumes currently, but will it hold its own when volumes increase?

- Is the tool supporting different data formats and transformation rules?

- Does the tool integrate with various sources and platforms?

I write to remember, and if, in the process, I can help someone learn about Containers, Orchestration (Docker Compose, Kubernetes), GitOps, DevSecOps, VR/AR, Architecture, and Data Management, that is just icing on the cake.